Abstract

Although the only curative therapy for chronic myeloid leukemia remains allogeneic stem cell transplantation (allo-SCT), early to mid-term results of imatinib in newly diagnosed patients are sufficiently impressive to have displaced allo-SCT to second- or third-line treatment. Patients now arrive at a decision for transplantation in a variety of disease situations: having failed to achieve certain hematological, cytogenetic and molecular milestones by some predetermined timepoint, having lost a previous best response or having progressed to advanced phase. The decision, therefore, is not simply whether to transplant or not, but also how to transplant. Evolving transplant technology requires that the individual circumstances of each patient be considered when recommending the procedure. Attempts to improve the safety of transplant are generally associated with a reduction in long-term disease control, and patient monitoring and management are life-long. The treatment of recurrent disease is no longer straightforward, with the choices being donor lymphocytes or tyrosine kinase inhibitors, alone or in combination. This section will review the evidence supporting some of these decisions and highlight current controversies.

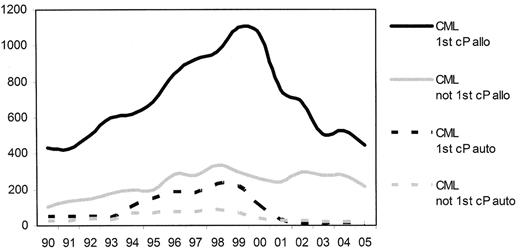

Prior to the introduction of the tyrosine kinase inhibitors (TKI) into clinical practice, chronic myelogenous leukemia (CML) in chronic phase was the most common single indication for allogeneic stem cell transplantation (allo-SCT). From 1999 there was a considerable reduction in the numbers of transplants reported to the European Group for Blood and Marrow Transplantation (EBMT) (Figure 1).1 This change in activity reflected the increased use of imatinib and antedated any demonstrable survival benefit. Subsequently, of course, the initial impressive results from the use of imatinib in newly diagnosed patients were confirmed in the 5-year follow-up of the IRIS study2 and have resulted in the recommendation to treat all newly diagnosed patients with imatinib unless exceptional circumstances prevail.3 The place of allo-SCT is now as a second-line strategy for patients “failing” therapy with imatinib and, with the introduction of the second-generation TKI, the use of allo-SCT may be delayed further in the course of the patients’ disease.

For a patient who satisfies certain predefined criteria for failure of imatinib,3 the current treatment choices include a second-generation TKI, conventional chemotherapy, autologous transplantation or allo-SCT. In attempting to define the optimal timing of allo-SCT it is important to remember that CML, although traditionally considered to be a homogeneous disease due to a single molecular aberration, is highly heterogeneous with respect to individual patients. For instance, the duration of chronic phase can be as short as a few weeks or as long as 20 years, with no apparent differences identifiable at diagnosis between patients with such variable prognoses. It is possible that modern molecular techniques, such as gene expression profiling,4 will help distinguish such patients but until that time we must use surrogate markers to try to predict patients who should receive early allo-SCT and those who might reasonably delay transplant and instead undertake a trial of a second-generation TKI.

Resistance to imatinib is itself a heterogeneous situation. Resistance can be defined as failure to achieve hematological, cytogenetic and/or molecular responses by certain timepoints, loss of a previously established hematological, cytogenetic and/or molecular response, or progression to accelerated phase or blast crisis (BC). It is likely that the mechanisms of these varied forms of resistance are different and that the prognostic implications will also be different. For instance, patients who achieve complete cytogenetic responses have very similar 5-year survivals irrespective of the time taken to achieve such a response, whereas progression to accelerated phase or BC invariably shortens survival.5 Currently there is much speculation that the failure to clear a large tumor burden, e.g., inability to achieve hematological or cytogenetic remission, or the development of increasing tumor load, i.e., loss of a previous response, favor the possibility of genetic instability and hence the occurrence of additional molecular events that predispose to disease progression. If this is true, then it is possible that the second-generation TKI will not induce durable responses and patients suitable for allo-SCT should not have the procedure unduly delayed for an indefinite trial of a TKI.

The decision to proceed to allo-SCT is largely based on a consideration of the balance of risks. Undoubtedly this balance has been tipped in favor of targeted therapy for newly diagnosed patients. However, concurrent with the introduction of imatinib, the past seven years have also witnessed significant changes in allogeneic transplant practice. These include the introduction of reduced-intensity conditioning (RIC) regimens or nonmyeloablative transplants, with the associated reduction in procedure-related mortality. Other improvements include better supportive care, which is reflected in the progressive reduction of infection-related deaths. Finally, advances in molecular HLA typing methodologies have led to the outcome of unrelated donor transplantation approaching that achieved in HLA-matched sibling transplantation.6

Predicting Transplant Outcome

The EBMT risk assessment score has proved to be a useful aid in predicting outcome for individual patients.7 However, patients included in that particular analysis were transplanted in the years 1989–1997 and, with the improvements in supportive care, HLA-matching and patient selection, we would expect outcomes to have improved since that publication. This is indeed the case, as demonstrated in a more recent analysis from the EBMT in which 2-year survivals for all risk categories had improved over the previous analysis by 10–15%.8 Patients who were transplanted in first chronic phase since 2000 had overall survivals, transplant-related mortality (TRM) and relapse risks at 2 years of 70%, 26% and 18%, respectively, compared with 59%, 38% and 11% in patients transplanted between 1980 and 1990. There has been a steady improvement in outcome for all patients irrespective of their risk assessment score, but the improvement is most noticeable in those with a score of 0–1, where overall survival and TRM were 54% and 31%, respectively, in the earlier cohort but improved to 80% and 17% in the later transplants. Improvements in overall survival for patients in acceleration and BC have been less marked: 40% and 22%, respectively, in the 1980–1990 cohort and 47% and 16% more recently.

Two major obstacles to the success of allo-SCT for all malignant conditions remain, namely transplant-related mortality and morbidity, and disease relapse, particularly in patients with advanced-phase disease. Previous attempts to minimize the incidence of either have led to a concomitant increase in the incidence of the other. Peripheral blood–derived stem cells (PBSC) reduce the time to engraftment and may reduce the incidence of early infective complications but lead to more chronic graft-versus-host disease (GVHD). The use of T-cell depletion undoubtedly reduces the risk of GVHD but results in an increase in disease recurrence. GVHD and graft-versus-leukemia (GVL) remain inextricably linked and have so far thwarted all efforts to isolate the individual mechanisms. CML, however, has the advantage over most other hematological malignancies in that it is exquisitely sensitive to allo-immune effects, and remission can be restored in most cases of chronic phase disease recurrence by donor lymphocyte infusions (DLI).9,10 Response rates to DLI in CML are in the range of 60–90%, and even after relapse into accelerated phase 30% of patients can be salvaged with DLI alone.11,12 The efficacy of DLI has enabled the development of RIC transplantation.13 Thus, once the decision to proceed to transplant has been taken, there are three important decisions to be made regarding the technique of the procedure: the type of conditioning regimen, the stem cell source and the use of T-cell depletion.

Reduced Intensity versus Myeloablative Conditioning

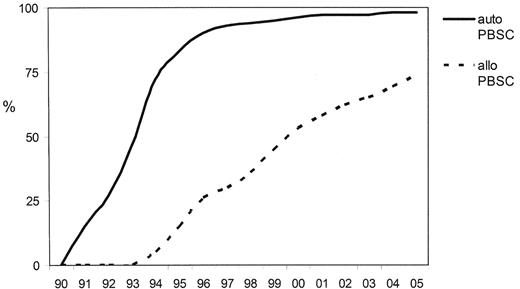

The realization that a considerable component of the curative effect of allo-SCT lay in the allo-immune response led to the introduction of RIC transplants. In 1998 RIC transplantation (RICT) accounted for fewer than 1% of transplants performed in Europe, but this had increased to 31% in 2003–4 (Figure 2).1 In most studies reported to date RICT has resulted in a reduction in TRM, and this has enabled the age of patients in whom transplantation can be undertaken at an acceptable risk to be extended by 10–15 years. In comparison to conventional allo-SCT, the RICT approach relies more on the GVL effect than on the antitumor effects of chemotherapy to control disease.

Studies of RIC allografts in CML have been limited and the published experience is summarized in Table 1 .14–22 Data comparing outcomes with conventional conditioning are even more limited and are confounded by differences in patient characteristics and the biases of historical controls. The largest series published to date from the EBMT reported the outcomes on 186 patients; 118 were transplanted in first chronic phase, 26 in second chronic phase and 42 in the accelerated or blastic phases. A variety of different conditioning regimens were employed and the majority (72%) received peripheral blood transplants. Disease phase was the most important factor predicting outcome (RR 3.4).20 Analysis of outcomes stratified according to the EBMT transplant risk score suggested that disease-free (DFS) and overall survival rates at 3 years were similar to those achieved with conventional conditioning7 and may be better for high-risk patients. These conclusions need to be interpreted with caution for a number of reasons. First, as mentioned above, the outcome of transplant according to the EBMT risk assessment score was analyzed using patients transplanted between 1989 and 1997, and the outcome of more recent myeloablative transplants (contemporaneously with the introduction of RICT) appears to be considerably better. Second, the predicted disadvantage of RICT is an increase in relapse incidence and this information tends to appear only with longer follow-up.

At present there are no agreed definitions of RIC, and a wide range of different RIC regimens exist with very variable degrees of myelosuppression and immunosuppression, including some regimens that would previously have been considered to be myeloablative. It is unclear whether the results with different RIC regimens will be equivalent, and the relative importance of cytotoxic chemotherapy and immune-mediated GVL in eradicating disease is uncertain. In the EBMT study the use of fludarabine, busulfan and antithymocyte globulin (Fd/Bu/ATG) appeared to be associated with improvements in DFS (RR 0.7, P = 0.06) and a reduction in TRM (RR 0.4, P = 0.02) but did not impact on overall survival. Fd/Bu/ATG is significantly more myelosuppressive, with more nonhematological toxicity, than the fludarabine and 2 Gy TBI approach pioneered by the Seattle group. Linked to this is the question of whether prior cytoreduction with chemotherapy (including imatinib) influences the outcome of RIC transplants by optimizing the GVL effect. It is possible that some of the more myelosuppressive regimens such as Fd/Bu/ATG may be sufficient in their own right, whereas some of the least intensive regimens may benefit from the addition of pretransplant therapy.

A second area of controversy is the use of T-cell depletion, most frequently achieved with lymphocyte depleting antibodies such as alemtuzumab and ATG. Their inclusion in the conditioning regimens facilitates engraftment and reduces GVHD. However, this effect comes with the risk of leukemic relapse and delayed immune reconstitution. One strategy adopted to overcome this problem is the preemptive use of DLI post transplant, although the risk of using DLI is related to the time post transplant and the T-cell dose.12 Imatinib is also an option and can be given safely post transplant. In a pilot study of 24 patients receiving RIC transplants at the Hammersmith Hospital, imatinib was given post transplant for 12 months. The imatinib was effective in maintaining molecular remission during administration and allowed the DLI to be delayed to a time where it might be used with a lower risk.23 Several other groups are exploring related protocols.

Stem Cell Source

The history of allogeneic PBSC transplantation mirrors that of RIC in that the switch from bone marrow (BM) to PBSC occurred before there was any evidence that PBSC confer benefit and do not incur harm (Figure 3). Data on improved engraftment rates in autologous transplantation were extrapolated to allografting, and by the time the randomized controlled studies of BM versus PBSC indicated the potential adverse effect of increasing the incidence of GVHD, particularly chronic GVHD, without any apparent improvement in overall survival, many centers had abandoned BM harvesting entirely.

Nine prospective randomized studies of sibling PBSC versus BMT for a variety of hematological diseases have been reported. These study groups have recently collaborated to conduct an individual patient data meta-analysis on a total of 1111 patients.24 Unsurprisingly, PBSC were associated with faster engraftment. PBSCT did not result in an increase in grade 2–4 acute GVHD but was associated with more grade 3–4 acute GVHD. Chronic GVHD was also more frequent, including an increase in extensive chronic GVHD. Despite this, the use of PBSC corresponded to improvements in overall and progression-free survival and a reduction in relapse risk in patients with high-risk disease. An a priori subgroup analysis confirmed that this was true for CML specifically, with an advantage for PBSC being seen for patients beyond first chronic phase.

With respect to CML, in a joint EBMT-IBMTR retrospective study including all leukemias Champlin et al identified that the outcome of matched sibling transplant for CML in the chronic phase was not affected by stem cell source but that the use of PBSC conferred a survival benefit for transplant for advanced-phase disease.25 The outcome of the patients included in this report has recently been updated to provide longer-term follow-up to 6 years (EBMT-IBMTR data presented at the Tandem Meetings and EBMT 2006). The advantage in DFS previously observed for PBSC in advanced acute leukemia is no longer seen, and DFS for CML in first chronic phase is significantly better with marrow. For patients with advanced CML, leukemia-free survival with peripheral blood remains better. Although this study has all the limitations of retrospective data analyses, these data are important as PBSC have become the preferred source of stem cells in many centers and this report will stimulate a re-examination of this practice.

In an attempt to reduce the risk of GVHD associated with PBSC allo-SCT the Essen transplant group have studied the use of highly purified CD34+ PBSC in chronic phase CML. They compared 32 patients receiving selected cells to 19 patients treated with unmanipulated PBSC and 22 with conventional BM transplants in the setting of HLA-identical sibling grafts. The estimated probability of grade II–IV acute GVHD was 60% for the PBSC group, 37% for the BMT group and 14% for the CD34+ PBSC group. The estimated probability of 3-year survival after transplant was 90% in the CD34+ PBSCT compared with 68% in the PBSCT group and 63% in the BMT group. However, the probabilities for molecular and cytogenetic relapse were 88% and 58% for CD34+ PBSCT and a total of 26 of the 32 patients who received CD34+ PBSCT required additional T cells on a median of two occasions (range 1–7). This suggests that the risks of PBSC in chronic phase CML may be overcome but at the expense of disease recurrence.26

With respect to unrelated donor transplant it is possible that the increased risks of GVHD may negate any benefit of PBSC. Elmaagacli et al compared PBSC with BM in chronic phase CML, and found that the use of PBSC was associated with improved survival (94% vs 66%), owing largely to a decrease in acute TRM (5% vs 30%). The overall prevalence of acute and chronic GVHD was similar between the two groups.27 However, any adverse effects of unrelated donor PBSC over BM in leukemia may only become apparent at a later timepoint, as demonstrated in a retrospective study from the IBMTR of 275 unrelated PBSC and 620 BM allografts. The incidences of acute GVHD (grades II–IV) and chronic GVHD (at 3 years) were 56% and 54% in recipients of PBSC transplantation and 45% and 39% after BMT. There was no difference in the relapse incidence between the two groups. TRM in the first 9 months post transplant was similar in both groups, but for those patients who survived to 9 months the late TRM was higher for PBSC than for BM transplants and this subsequently translated into a poorer survival. The effect was particularly marked in patients deemed to have “good risk” disease at the time of transplant.28

In summary the previously reported advantages of PBSC over BM may not be sustained in the longer term with the higher incidence of chronic GVHD resulting in late deaths with no obvious benefit in terms of preventing disease recurrence. Attempts to abrogate GVHD such as T-cell depletion are likely to result in a higher incidence of relapse and to require additional intervention with donor lymphocyte infusions and/or TKI.

Monitoring Patients after Transplant

The definition of remission of CML has evolved over the last 15 years. It has been recognized for some time that morphological remission of CML is an inadequate endpoint for long-term disease control. In the interferon era cytogenetic remissions were the therapeutic goal and were associated with prolongation in survival. Complete cytogenetic remissions obtained on imatinib are also associated with excellent durability of response.2 After allogeneic transplant complete cytogenetic remission is the norm, and instead the detection of the chimeric BCR-ABL mRNA transcript by RT-PCR is used as a powerful predictor of subsequent leukemic relapse. Increasingly, molecular responses are being used as a surrogate for remission duration with imatinib therapy.29

Early data relating to the incidence and risk of relapse after allografting were derived from qualitative RT-PCR assays for BCR-ABL transcripts. The highest risk of relapse occurred in patients who were BCR-ABL–positive “early” (≤ 12 months) after transplant. Minimal residual disease (MRD) can be detected by RT-PCR for years post-transplant. BCR-ABL has been detected in 25–50% of patients more than 3 years post transplant, with subsequent relapse rates of approximately 10–20%.30 These data suggest that low levels of BCR-ABL transcripts identified some years after transplant for CML may not be an absolute harbinger of relapse.

The predictive value of MRD detection is strengthened by BCR-ABL quantification. Several studies have demonstrated that the molecular burden of BCR-ABL mRNA, and the kinetics of increasing BCR-ABL, predict relapse. Low (or no) residual BCR-ABL was associated with a very low risk of relapse (1%), compared to a 75% relapse rate in patients with increasing or persistently high BCR-ABL levels.31 Olavarria et al studied 138 CML patients “early” (3–5 months) post transplant and showed that the BCR-ABL level was highly correlated with relapse.32 Patients with no evidence of BCR-ABL had a 9% risk of subsequent relapse, whereas patients defined as having a “low” burden of disease (< 100 BCR-ABL transcripts/μg RNA) or “high” level of transcripts (>100 copies/μg) had cumulative relapse rates of 30% and 74%, respectively. The importance of high levels of transcripts early after transplant was recently confirmed by Asnafi et al, who found that patients likely to relapse could be identified as early as day 100 post transplant.33 The presence of residual leukemia late (> 18 months) after transplant is also predictive.34 In a study of 379 CML patients, of 90 patients (24%) who had at least one assay positive for BCR-ABL, 13 (14%) relapsed. Only 3/289 patients who were persistently BCR-ABL–negative relapsed (hazard ratio of relapse = 19).
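
The relapse proportions quoted for the 379-patient study can be checked with simple arithmetic. The sketch below is illustrative only; the function name is hypothetical. It computes the crude relapse proportion in each group and their ratio. Note that this crude ratio is not the same quantity as the reported hazard ratio of 19, which comes from a time-to-event analysis that accounts for follow-up time, something raw proportions ignore.

```python
def crude_risk_ratio(events_a: int, total_a: int,
                     events_b: int, total_b: int) -> float:
    """Ratio of the crude event proportions in group A versus group B.

    This is a plain ratio of proportions, not a hazard ratio: it takes
    no account of when events occurred or how long patients were followed.
    """
    if total_a <= 0 or total_b <= 0 or events_b <= 0:
        raise ValueError("totals and the reference event count must be positive")
    return (events_a / total_a) / (events_b / total_b)


# Figures quoted in the text: 13 of 90 patients with at least one positive
# assay relapsed, versus 3 of 289 persistently negative patients.
positive_rate = 13 / 90     # about 14%
negative_rate = 3 / 289     # about 1%
ratio = crude_risk_ratio(13, 90, 3, 289)
print(f"{positive_rate:.1%} vs {negative_rate:.1%}, crude ratio {ratio:.1f}")
```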

A recent study from the Hammersmith has further attempted to quantify the risk of relapse. This group has defined disease recurrence as requiring intervention if the BCR-ABL/ABL ratio exceeded 0.02% on three occasions or reached 0.05% on two occasions. Two hundred forty-three patients were classified by serial quantitative RT-PCR into 4 groups: (1) 36 patients were “durably negative” or had a single low-level positive result; (2) 51 patients had more than one positive result but never more than two consecutive positive results (“fluctuating positive, low level”; BCR-ABL/ABL ratio not satisfying the definition of relapse); (3) 27 patients had persisting low levels of BCR-ABL transcripts but never more than three consecutive positive results (“persistently positive, low level”); and (4) 129 patients relapsed. In 107 of these 129 patients, relapse was based initially only on molecular criteria; in 72 (67.3%) of these patients the leukemia progressed to cytogenetic or hematologic relapse either prior to or during treatment with DLI. The study not only confirmed the value of their definition of relapse but indicated that the probability of disease recurrence was 20% and 30% in groups 2 and 3, respectively. In this series relapse occurred up to 8 years post transplant, confirming the need for long-term monitoring strategies.35
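
The molecular definition of relapse quoted above can be written as a simple rule. The following is a minimal sketch, not the study's own implementation: the function name is hypothetical, ratios are assumed to be expressed in percent, and "occasions" are counted across the whole series of measurements rather than required to be consecutive, a point the text does not specify. As noted elsewhere in this section, thresholds of this kind are laboratory-specific and cannot be transferred between institutions.

```python
from typing import Sequence


def meets_relapse_definition(ratios_percent: Sequence[float]) -> bool:
    """Check serial BCR-ABL/ABL ratios (in %) against the quoted definition.

    Rule as stated in the text: the ratio exceeded 0.02% on three
    occasions, or reached 0.05% on two occasions.

    Assumption: occasions are counted anywhere in the series and need
    not be consecutive.
    """
    exceeded_low = sum(1 for r in ratios_percent if r > 0.02)   # "exceeded 0.02%"
    reached_high = sum(1 for r in ratios_percent if r >= 0.05)  # "reached 0.05%"
    return exceeded_low >= 3 or reached_high >= 2


# Hypothetical series of results for illustration only.
print(meets_relapse_definition([0.0, 0.03, 0.01, 0.03, 0.04]))  # three values above 0.02%
print(meets_relapse_definition([0.03, 0.0, 0.03]))              # only two values above 0.02%
```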

In view of the importance of the detection of residual disease at a time of low tumor burden when DLI are likely to be most effective we recommend monitoring by RT-PCR at intervals no greater than 3 monthly for the first 2 to 3 years post transplant, 6 monthly until 5 years after grafting and annually thereafter. Any patient with a positive result should be monitored more frequently (approximately 4 weekly) until the course of their disease can be defined more precisely. It is essential for each laboratory to establish their own quantitative definition of relapse as it is not possible to extrapolate the results achieved in one institution with those obtained elsewhere. This situation is unsatisfactory but will hopefully benefit from a global attempt at harmonization of RT-PCR standards for the detection of BCR-ABL transcripts.36
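
The recommended monitoring schedule can be summarized as a small lookup. This is a sketch under stated assumptions: the function and parameter names are hypothetical, intervals are approximated in weeks (3 monthly as 13, 6 monthly as 26, annually as 52), and the "first 2 to 3 years" is represented by an adjustable cut-off that defaults to 3 years.

```python
def monitoring_interval_weeks(years_post_transplant: float,
                              last_result_positive: bool = False,
                              intensive_phase_years: float = 3.0) -> int:
    """Suggested maximum interval (in weeks) until the next RT-PCR test.

    Assumptions: month-based intervals are rounded to weeks, and the
    end of the 3-monthly phase (2 to 3 years in the text) is set by
    `intensive_phase_years`.
    """
    if years_post_transplant < 0:
        raise ValueError("years_post_transplant must be non-negative")
    if last_result_positive:
        return 4    # approximately 4 weekly after any positive result
    if years_post_transplant < intensive_phase_years:
        return 13   # 3 monthly
    if years_post_transplant < 5.0:
        return 26   # 6 monthly until 5 years after grafting
    return 52       # annually thereafter


for years in (1.0, 4.0, 6.0):
    print(years, monitoring_interval_weeks(years))
```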

Management of Persistent or Relapsed Disease Post Transplant

DLI have become the treatment of choice for patients who relapse after allogeneic SCT, and durable molecular remissions are achieved in the majority of patients relapsing into chronic phase.9,10,12 GVHD and marrow aplasia remain the two most important complications of DLI, but when an escalating dose schedule is used these problems are greatly reduced. In a recent EBMT study, survival after relapse (defined as cytogenetic or hematological relapse) was related to five factors: time from diagnosis to transplant, disease phase at transplant and at relapse, time from transplant to relapse and donor type.37 The effects of individual adverse risk factors were cumulative, so that patients with two or more adverse features had a significantly reduced survival (35% vs 65% at 5 years). Furthermore, DLI was less effective in patients who developed GVHD after transplant. However, for patients transplanted in and relapsing in chronic phase, the efficacy of escalating dose DLI was exceptionally high at > 90% with a 5% procedure-related mortality, rendering DLI the gold standard for the management of relapse in this group.

Imatinib is now an alternative to DLI as it could potentially be used to achieve remission without GVHD and could be effective when DLI has failed. It could also be used in combination with lower doses of DLI to maximize responses while minimizing the risk of GVHD. A number of groups have now used imatinib in the management of patients relapsing after allogeneic transplantation. Most of these patients were treated for relapse into advanced-phase disease, as DLI are of limited value in this situation. Other patients were treated for cytogenetic relapse or hematological relapse into chronic phase, often in the presence of ongoing immunosuppression for GVHD and/or after failure of DLI. Recently, the Chronic Leukemia Working Party of the EBMT has reported a retrospective analysis of 128 patients treated with imatinib for relapse after allogeneic transplant.38 The overall hematological response rate was 84% (98% for patients in chronic phase). The complete cytogenetic response was 58% for patients in chronic phase, 48% for advanced phase and 22% for patients in BC. Complete molecular responses (defined as absence of BCR-ABL transcripts by RT-PCR assays) were obtained in 25 patients (26%), of whom 21 were in chronic phase or advanced phase. With a median follow-up of 9 months the estimated 2-year survivals for chronic phase, advanced phase and BC patients were 100%, 86% and 12%, respectively. Of 79 evaluable patients, 45 (57%) achieved full donor and 11 (14%) mixed chimerism after imatinib therapy. Thus, imatinib appeared to have significant activity against relapsed CML after allogeneic transplant.

However, Weisser et al have recently compared the use of DLI or imatinib in 31 patients. Twenty-one patients were treated for disease recurrence (14 with cytogenetic and 7 with hematological relapses) with DLI, and 10 (9 with cytogenetic and 1 with hematological relapse) received imatinib because of lack of availability of the original donor. Complete molecular remissions were observed in 20 of the 21 patients (95%) who received DLI and 7 of 10 (70%) who were given imatinib. However, 6 of the 10 patients treated with imatinib lost their best response whilst receiving the drug. Imatinib had been discontinued in 4 patients with confirmed molecular remission and disease recurred 2–4 months later in all but one of these individuals. Seven of the patients who failed imatinib subsequently received DLI and 6 achieved a molecular remission. Only 3 of the 20 patients who initially responded to DLI experienced disease relapse. The authors concluded that imatinib, unlike DLI, cannot induce durable response in the majority of patients.39

Of course, future practice will involve patients who have received allo-SCT largely as a consequence of having failed imatinib, and perhaps also a second-line TKI, in which case the efficacy of targeted therapy for disease recurrence will have to be re-established.

In summary, allo-SCT has the potential to be a highly effective treatment for CML responding poorly to imatinib. Careful selection of suitable patients is necessary not only to avoid the high risks of allografting in patients likely to do well with second-generation TKI but also to ensure that patients whose disease is best treated with high-dose therapy gain rapid access to transplantation. Once the decision has been made for transplant, consideration must be given to the best technology for each individual. Follow-up of patients transplanted for CML is life-long and necessitates a program of monitoring for minimal residual disease and prompt intervention with appropriate therapy at the time of confirmation of disease recurrence.

Figure 1. Trends in transplant practice in Europe since 1990: transplant for chronic myeloid leukemia (derived from the EBMT annual activity survey, 2005 data not yet complete).

Figure 2. Trends in transplant practice in Europe since 1990: changes in conditioning regimens (derived from the EBMT annual activity survey, 2005 data not yet complete).

Figure 3. Trends in transplant practice in Europe since 1990: changes in stem cell source (derived from the EBMT annual activity survey, 2005 data not yet complete).
