Abstract

The use of magnetic resonance imaging (MRI) to estimate tissue iron was conceived in the 1980s, but has only become a practical reality in the last decade. The technique is most often used to estimate hepatic and cardiac iron in patients with transfusional siderosis and has largely replaced liver biopsy for liver iron quantification. However, the ability of MRI to quantify extrahepatic iron has had a greater impact on patient care and on our understanding of iron overload pathophysiology. Iron cardiomyopathy used to be the leading cause of death in thalassemia major, but is now relatively rare in centers with regular MRI screening of cardiac iron, through earlier recognition of cardiac iron loading. Longitudinal MRI studies have demonstrated differential kinetics of uptake and clearance among the different organs of the body. Although elevated serum ferritin and liver iron concentration (LIC) increase the risk of cardiac and endocrine toxicities, some patients unequivocally develop extrahepatic iron deposition and toxicity despite having low total body iron stores. These observations, coupled with the advent of increasing options for iron chelation therapy, are allowing clinicians to more appropriately tailor chelation therapy to individual patient needs, producing greater efficacy with fewer toxicities. Future frontiers in MRI monitoring include improved prevention of endocrine toxicities, particularly hypogonadotropic hypogonadism and diabetes.

Motivation for MRI measurements

Iron overload is a surprisingly common clinical problem, arising from disorders of increased absorption such as hereditary hemochromatosis or thalassemia intermedia syndromes or through frequent blood transfusion therapy. Before the routine availability of chelation therapy, chronically transfused patients died from cardiac iron overload in their teens and twenties.1 Since the introduction of deferoxamine in the early 1970s, life expectancy has improved dramatically.2 However, despite improvements, mortality in middle age continued to be problematic.2,3 There are several reasons that mortality continued to be high. First, deferoxamine is a difficult drug to take for a lifetime and some patients just refused to take it consistently. Second, some patients died because cardiac symptoms and imaging evidence of ventricular dysfunction are late and ominous findings.4 Whereas some patients could be “rescued” by continuous IV deferoxamine therapy, the process took several years and patients who refused to comply with long-term, intensive therapy (20%) died.5 A third reason for continued cardiac mortality was that some older patients developed cardiac symptoms despite apparently adequate control of hepatic iron stores, suggesting insidious occult cardiac iron accumulation. This was particularly puzzling and discouraging to both patients and practitioners after decades of meticulous attention to chelation therapy. Lastly, many centers relied exclusively on serum ferritin to track somatic iron stores. Whereas trends in serum ferritin remain an important monitoring tool, serum ferritin is a poor marker of iron balance because it varies with inflammation, ascorbate status, and intensity of transfusion therapy. Liver iron measurement by biopsy is more accurate but its invasiveness limited routine screening at most institutions.

The desire to replace liver biopsy with a noninvasive test drove the earliest studies on magnetic resonance imaging (MRI) iron quantification.6 Investigators eventually realized that they could also identify cardiac iron in asymptomatic subjects and that signal changes shown with MRI could be used as preclinical “end points” for chelation response.7 Recently, cardiac and liver iron estimates by MRI have become the primary outcome measures for clinical studies of iron chelation therapy.8–10

Theoretical basis of MRI tissue measurements

The use of MRI to estimate tissue iron was conceived in the early 1980s, but did not become practical until MRI technology matured 20 years later.6 The general concept is simple.11 MRI machines can generate images at various observation or “echo” times to vary the contrast among different organs. All organs darken with increasing echo time, but those containing iron darken more rapidly (Figure 1). T2* represents, roughly, the echo time necessary for a tissue to become twice as dark (strictly, it is the time constant at which the signal has fallen to 1/e, about 37%, of its starting value). It may be thought of as a half-life, with small values representing rapid signal loss. Alternatively, image darkening can be expressed by R2*, its rate of darkening. Some investigators prefer to report R2* values rather than T2* values, because R2* is directly proportional to iron concentration.12,13 However, R2* (in Hz) is simply 1000/T2* (with T2* in milliseconds) and vice versa, making it easy to convert one representation to the other.
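The relationship between the two representations, and the kind of fit shown in Figure 1, can be sketched in a few lines of Python. This is a minimal illustration only: it assumes a simple monoexponential decay, S(TE) = S0 · exp(−TE · R2*), fitted by log-linear least squares, which is not necessarily the fitting model used by the cited studies or by clinical software. The function names and the synthetic echo times are hypothetical.

```python
import math

def t2star_to_r2star(t2star_ms):
    """Convert T2* (milliseconds) to R2* (Hz): R2* = 1000 / T2*."""
    return 1000.0 / t2star_ms

def r2star_to_t2star(r2star_hz):
    """Convert R2* (Hz) back to T2* (milliseconds)."""
    return 1000.0 / r2star_hz

def fit_r2star(echo_times_ms, signals):
    """Estimate R2* (Hz) from signal intensities at several echo times.

    Assumes S = S0 * exp(-TE * R2*), so ln(S) is linear in TE and the
    slope of an ordinary least-squares line is -R2*.
    """
    xs = [te / 1000.0 for te in echo_times_ms]  # echo times in seconds
    ys = [math.log(s) for s in signals]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return -sxy / sxx

# Synthetic, noise-free example (illustrative values, not patient data).
echo_times_ms = [1.0, 2.0, 4.0, 6.0, 8.0]
true_r2star_hz = 200.0
signals = [1000.0 * math.exp(-te / 1000.0 * true_r2star_hz) for te in echo_times_ms]
estimated_r2star_hz = fit_r2star(echo_times_ms, signals)
estimated_t2star_ms = r2star_to_t2star(estimated_r2star_hz)
```

Real images contain noise and a signal floor, so practical software typically needs a more careful fit than this sketch.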

Figure 1. (Left) Open circles represent liver signal intensity and the solid line reflects the R2* fit at different echo times (TE). (Right) A map generated by calculating R2* values for every voxel in the image, with the reported liver iron concentration (LIC) representing the average liver R2* value scaled by a linear equation.12

MRI scanners can also collect images suitable for T2 (and R2) analysis instead of T2* analysis, using radio waves rather than magnetic gradients to generate images at different echo times. Image analysis and iron quantification are similar whether using R2 or R2* images. R2 images take longer to collect and are used more frequently to evaluate liver iron concentration (LIC).14 Whereas cardiac T2 imaging is also possible, it is more challenging because of respiratory motion, limiting its widespread acceptance.

What does MRI actually measure?

Iron itself is invisible on an MRI. Instead, MRI detects iron's influence on the magnetic milieu of water protons diffusing in tissues. Typically, the magnetic fields in a clinical scanner are extremely homogeneous, but iron within the tissues creates local magnetic field disturbances that cause the images to darken faster. Not all forms of iron are equally magnetically potent. Labile iron species, although toxic to the body, are magnetically silent at physiologic concentrations. Ferritin, the body's initial line of defense against circulating free iron, is weakly detectable by MRI when it is dispersed freely in the cytosol.15 However, ferritin aggregates and their breakdown product, hemosiderin, overwhelmingly determine tissue R2 and R2* (or T2 and T2*). The size and distribution of these iron stores powerfully modulate the relationship between iron concentration and the MRI signal intensity.15,16

Hemosiderin represents the dominant storage form of iron and its levels change quite slowly with time. R2* values are almost entirely determined by hemosiderin concentration, whereas R2 retains some sensitivity to the amount of soluble ferritin; newer techniques known as “reduced R2” even attempt to separately estimate the ferritin and hemosiderin pools.17 However, both hemosiderin and soluble ferritin are in dynamic equilibrium with damaging labile iron, thereby representing the “potential” for clinical iron toxicity. This is best demonstrated by the suppression of arrhythmias and the improvement in ventricular function that may be observed using continuous IV deferoxamine, which occurs long before the therapy significantly lowers tissue iron stores as shown by MRI.18 However, cessation of therapy before cardiac iron clearance (which often takes years) is associated with a high relapse rate.

MRI is also sensitive to the tissue water concentration. A patient given high-dose diuretics could theoretically experience an increase in their predicted organ R2 and R2* from a concentration effect, even though the total organ iron has not changed. Fluid overload could produce the opposite effect. Fortunately, homeostatic mechanisms prevent major swings in cellular osmolality and organ water concentration, but changes in the tissue wet-to-dry weight ratio are partially responsible for small interstudy (5%-7%) fluctuations in R2 and R2* values.

Experimental calibration versus validation

Calibration refers to the mathematical association between MRI measurements and underlying tissue iron concentration. Published iron calibration curves can be found for liver R2,14 liver R2*,12 and cardiac R2* measurements.13

Validation of MRI methods refers to the establishment of their robustness and utility. The greatest advantage of MRI is its overall reproducibility. Interobserver variability is insignificant. Interstudy variability is approximately 5%-7%.19 Variability among scanners, including different manufacturers, is also small.20,21 MRI iron estimates also correspond to those obtained from other monitoring techniques, such as trends in serum ferritin,10 and predict poor clinical outcomes such as heart failure.22,23 Therefore, MRI has become the de facto standard for all recent clinical trials on iron chelation and the clinical standard of care in major thalassemia centers.10,24

In clinical practice, validation is more important than calibration. For inaccessible organs such as the pituitary and pancreas glands, absolute iron concentrations may never be derived; however, the R2 and R2* values associated with glandular dysfunction and destruction are under investigation.25–28

Although most 1.5 Tesla magnets are intrinsically able to perform iron estimation measurements, specialized software and local expertise/training are required for accurate assessment. As a result, some centers have chosen to purchase commercial software or outsource their image analysis to fee-for-service vendors rather than commit the resources to obtain the measurements themselves.

Impact of MRI iron imaging on patient management

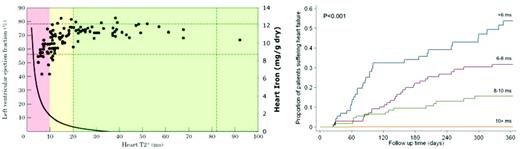

The introduction of MRI to quantitate liver and cardiac iron had a profound impact on our understanding and management of cardiac iron overload. Figure 2 shows the observations of Anderson et al in 2001, plotting left ventricular ejection fraction (LVEF) against cardiac T2*.22 All patients with cardiac T2* in the normal range (> 20 milliseconds) demonstrated normal ventricular performance. As cardiac T2* decreased below 20 milliseconds (reflecting increasing cardiac iron), the likelihood of left ventricular dysfunction increased; this decline coincided with an increase in the estimated heart iron concentration. However, most patients with MRI-detectable cardiac iron still exhibited normal cardiac function and therefore MRI was able to detect cardiac problems before toxicity was manifested.

Figure 2. (Left) Plot of left ventricular ejection fraction (LVEF) versus cardiac T2*, with colored zones indicating cardiac risk and the solid curved line representing cardiac iron (right axis).22,49 (Right) Probability of developing clinical heart failure in one year as a function of initial cardiac T2* value. (Figure reproduced with permission.23)

At first, practitioners were unsure whether cardiac iron deposition in the absence of any cardiac symptoms warranted escalation of iron chelator therapy. However, registry data from the same group demonstrated that the prospective risk of developing symptomatic heart failure in 1 year was a strong function of initial cardiac T2*, being more than 50% for a T2* < 6 milliseconds (Figure 2 right).23 In contrast, patients having a T2* > 10 milliseconds exhibited a fairly low cardiac risk. Based on these data, many thalassemia practitioners began using a “stoplight” risk paradigm, with T2* < 10 milliseconds considered the red (danger) zone, T2* of 10-20 milliseconds the yellow (cautionary) zone, and T2* > 20 milliseconds the green (safe) zone.
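The “stoplight” paradigm amounts to a simple threshold rule on cardiac T2*. The sketch below encodes the three zones as described in the text; the function name is hypothetical, and assigning the boundary values of exactly 10 and 20 milliseconds to the yellow zone follows the stated ranges (< 10, 10-20, > 20) rather than any published tie-breaking rule.

```python
def cardiac_risk_zone(t2star_ms):
    """Map cardiac T2* (milliseconds) to the stoplight risk zone.

    red:    T2* < 10 ms   (danger)
    yellow: 10-20 ms      (cautionary)
    green:  T2* > 20 ms   (safe)
    """
    if t2star_ms < 10.0:
        return "red"
    if t2star_ms <= 20.0:
        return "yellow"
    return "green"

# Illustrative values only.
zones = [cardiac_risk_zone(v) for v in (4.0, 8.9, 15.0, 25.0)]
```

Such a rule is a risk-communication aid, not a substitute for the continuous relationship between T2* and heart failure risk shown in Figure 2.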

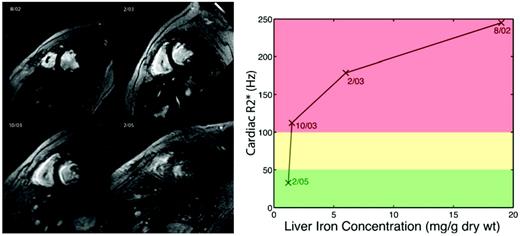

Further insights into iron cardiomyopathy were gained as cardiac T2* became more widely measured. Cardiac iron clearance was found to be exceptionally slow, with a half-life of 13-14 months in response to continuous IV deferoxamine, nearly 4 times slower than hepatic iron removal.18 Figure 3 is a patient example from our institution. The patient presented in August 2002 with an LIC of 19 mg/g, a cardiac T2* of 4 milliseconds, an LVEF of 47%, and ventricular tachycardia. He was placed on intensive, continuous IV deferoxamine (100 mg/kg/d) via a peripherally inserted central catheter. Four months later, his liver iron had declined to 6 mg/g and his LVEF had improved to 53%, but his cardiac T2* was only 5.6 milliseconds. Nine months later, his liver iron (1.5 mg/g) and cardiac function were normal (LVEF 68%), but his cardiac T2* remained dangerously low (8.9 milliseconds). Before the availability of cardiac MRI, this patient probably would have resumed normal subcutaneous deferoxamine, creating the potential for a fatal relapse. This case also illustrates one mechanism by which patients manifest severe cardiac iron deposition despite normal hepatic iron levels.
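To give a sense of what a 13-14 month half-life means in practice, the following sketch assumes simple first-order (exponential) clearance, which is what the term “half-life” implies but is an idealization for any individual patient. The midpoint of 13.5 months and the function names are assumptions made for illustration.

```python
import math

def fraction_remaining(months, half_life_months):
    """Fraction of the initial cardiac iron left after `months`,
    assuming first-order clearance with the given half-life."""
    return 0.5 ** (months / half_life_months)

def months_to_reach(fraction, half_life_months):
    """Months needed for iron to fall to `fraction` of its initial value."""
    return half_life_months * math.log(fraction) / math.log(0.5)

CARDIAC_HALF_LIFE_MONTHS = 13.5  # assumed midpoint of the 13-14 month range

after_one_year = fraction_remaining(12.0, CARDIAC_HALF_LIFE_MONTHS)
time_to_quarter = months_to_reach(0.25, CARDIAC_HALF_LIFE_MONTHS)
```

Under these assumptions, more than half of the initial cardiac iron is still present after a full year of therapy, and reaching one quarter of the starting burden takes two half-lives (27 months), consistent with the statement that clearance often takes years.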

Figure 3. (Left) Cardiac T2* images depicting changes in heart and liver iron at four time points over 3 years. (Right) Plot of heart R2* (iron) versus liver iron concentration using color coding corresponding to cardiac risk as in Figure 2.

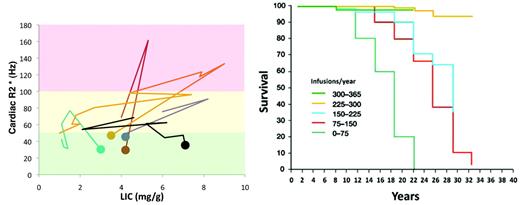

Patients can, however, primarily acquire cardiac iron without interim liver iron overload. We have been measuring cardiac and liver iron in our patients for almost 10 years. In that time, we have had 11 patients develop cardiac iron de novo. Five of these patients had a history of noncompliance, high serum ferritin values, and liver iron estimates ranging from 10.2 to 41.5 mg/g dry weight when their cardiac iron became detectable by MRI (T2* < 20 milliseconds). In contrast, 6 patients had liver iron levels generally considered to be acceptable (Figure 4 left panel), ranging from 1.5 to 9 mg/g dry weight. Before the availability of MRI, silent, indolent cardiac iron accumulation would have continued in these patients until they presented with symptoms. However, with prompt recognition of their cardiac iron buildup, it was relatively easy to clear the iron with modest intensification of chelation therapy. These data demonstrate that whereas poor compliance and high liver iron increase the risk of cardiac iron complications, there is no LIC threshold below which a patient is “safe” from cardiac iron accumulation.31 Therefore, all chronically transfused patients should undergo cardiac T2* assessment if these resources are available.

Figure 4. (Left) Plot of cardiac R2* versus LIC for six patients who developed primary cardiac iron (dot indicates starting value < 50 Hz) despite relatively modest LIC burden. (Right) Plot of patient survival as a function of years of chelation therapy in thalassemia major patients (figure reproduced with permission31).

LIC measurements alone have limited predictive value for extrahepatic iron deposition because they are relatively insensitive to patterns of iron chelation therapy. For example, a patient may experience similar changes in liver iron by taking 80 mg/kg of deferoxamine 3 times per week as he or she would taking 40 mg/kg of the drug 6 times per week. However, Gabutti and Piga (Figure 4 right panel) demonstrated that the frequency of deferoxamine administration (not iron balance) was the strongest predictor of survival.32 In their observational cohort, neither longitudinal metrics of liver iron nor serum ferritin predicted survival.

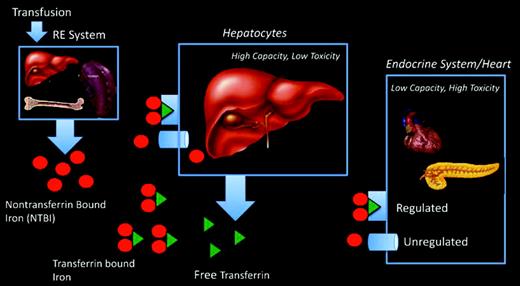

This apparent paradox by which de novo cardiac iron accumulation occurs despite total body iron balance can be explained by the different iron capacity and uptake mechanisms of the heart and liver (Figure 5). Transfusion therapy loads the reticuloendothelial system, which exports iron from scavenged red blood cells as non-transferrin-bound iron (NTBI). Transferrin protein binds most of the exported iron and shuttles it for metabolic utilization and storage. The liver is the dominant iron-storage depot for the body, accounting for more than 80% of the total body iron in thalassemia, and has high-capacity mechanisms for clearing both transferrin and NTBI species from the circulation. The liver is also highly accessible to iron chelation, so LICs reflect the overall balance between transfusional iron and grams of chelator taken.33,34 In contrast, the heart and endocrine tissues have tightly regulated transferrin uptake and develop iron overload only when there is circulating NTBI. Because transferrin is nearly always saturated in thalassemia major, NTBI rebounds to high levels whenever iron chelators are not present.35 Therefore, cardiac iron accumulation is determined primarily by hours per week of chelation rather than grams per week of chelator. Anything short of perfect iron chelation compliance creates the potential for cardiac iron accumulation over time.32

Figure 5. Schematic depicting organs responsible for iron cycling and iron uptake in thalassemia major patients.

Whereas NTBI is likely the causal agent for extrahepatic iron deposition, its measurement is not currently a useful clinical monitoring technique because it represents only a single “snapshot” of chelation compliance.36 The ability of cardiac MRI to: (1) detect insidious, preclinical cardiac iron accumulation; (2) ensure completion of cardiac iron removal with intensified therapies; and (3) compare the relative cardiac efficacies of different chelators and chelation strategies, has improved patient safety and comfort at our institution and others. Some investigators also directly attribute improved patient survival in recent years to increasing MRI availability,37 although the introduction of oral chelation and combination therapies certainly plays a role in improved outcomes as well.

MRI assessment of liver iron

Either liver R2 or liver R2* can be used to estimate LIC, depending on local expertise. We routinely perform both and average the results together, but factors such as software availability, radiologist interest/training, and billing considerations often determine what techniques are used clinically. Liver R2* images are the easiest and quickest to collect, but require specialized software to generate R2* and iron estimates. This software can be developed locally by an experienced programmer or may be purchased from commercial vendors. The upper limit of liver iron that can be reliably estimated by R2* depends on scanner specifications, but is generally 30-40 mg/g dry weight at 1.5 Tesla.

Liver R2 can be collected as a series of 7 breath-hold acquisitions or as a 12-minute free-breathing acquisition. Some centers choose to outsource their image processing to a commercial vendor (Ferriscan; Resonance Health) for trained technicians, quality control, and report generation.

Both R2 and R2* values should be converted to liver biopsy equivalents using established calibration curves.12,14 Prospective cardiac risk increases with severe hepatic siderosis.34,38 High liver iron (15-20 mg/g dry weight) damages liver parenchyma and increases circulating NTBI levels dramatically.36,39,40 Therefore, the penalty for chelator noncompliance increases at high LICs.10,36 LIC values below 5 mg/g can facilitate cardiac iron clearance with deferoxamine and deferasirox10,31; however, no liver iron level can be considered “safe” from a cardiac and endocrine perspective and extrahepatic monitoring by MRI is essential.

MRI assessment of cardiac iron

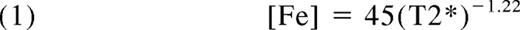

Cardiac R2* (or T2*) is generally measured using the same scanner and software tools as those used for the measurement of liver R2*. It is a little more labor intensive to acquire, but any center with expertise in cardiac scanning should be comfortable planning and executing the examination. Estimates of left ventricular dimensions and function can be obtained at the same time. Cardiac T2* can be converted to cardiac iron concentrations using the following equation13:

$$[\mathrm{Fe}] = 45.0 \times (T2^{*})^{-1.22}$$

where [Fe] is the cardiac iron concentration in milligrams per gram dry weight and T2* is in milliseconds. A cardiac T2* of 20 milliseconds is considered the lower limit of normal, corresponding to a myocardial iron concentration of 1.16 mg/g. Cardiac T2* values between 10 and 20 milliseconds represent mild to moderate cardiac iron deposition. Patients with a cardiac T2* in this range rarely have heart failure, but chelation should be adjusted to facilitate cardiac iron clearance. Cardiac T2* below 10 milliseconds represents severe cardiac iron loading, with the risk of heart failure increasing sharply as T2* declines.23 Without intensification of therapy, a patient with a T2* < 6 milliseconds has a 50% risk of developing heart failure in 1 year.
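The conversion from cardiac T2* to iron concentration can be checked numerically. The sketch below assumes the power-law calibration [Fe] = 45.0 × (T2*)^−1.22 from the published cardiac calibration cited in the text (reference 13). These coefficients are taken here as an assumption; they do reproduce the 1.16 mg/g value quoted above for a T2* of 20 milliseconds. The function name is hypothetical.

```python
def cardiac_iron_mg_per_g(t2star_ms):
    """Estimate cardiac iron (mg/g dry weight) from cardiac T2* (ms).

    Assumed calibration: [Fe] = 45.0 * (T2*)^-1.22
    """
    return 45.0 * t2star_ms ** -1.22

# Iron estimates at the stoplight thresholds discussed in the text.
iron_at_20_ms = cardiac_iron_mg_per_g(20.0)  # lower limit of normal
iron_at_10_ms = cardiac_iron_mg_per_g(10.0)  # boundary of severe loading
```

Because the relationship is a power law rather than a straight line, equal steps in T2* correspond to much larger changes in iron at low T2* values than at high ones.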

MRI assessment of pancreas R2*

Pancreas R2* measurements can readily be obtained using the same tools and techniques used for liver R2*; on some scanners, it is essentially a “free” measurement. Whereas they are not being used in routine clinical practice currently, pancreas R2* values offer complementary information to liver and heart iron estimates.28 The pancreas, like the heart, exclusively loads NTBI. The kinetics of pancreatic iron loading and unloading are intermediate between the heart and liver, making pancreas R2* a better predictor of cardiac iron than liver iron.28 We treat increases in pancreatic R2* as surrogates for chronic NTBI exposure and modify chelation therapy accordingly, even if cardiac and hepatic iron estimates are stable. This “look-ahead” strategy allows for more gentle modifications to iron chelation.

Pancreas R2* values also affect our cardiac MRI monitoring strategies. Because the pancreas loads earlier than the heart, a pancreas R2* value < 100 Hz essentially precludes cardiac iron deposition (negative predictive value > 95%) and we follow these patients by abdominal MRI examination only.28 This staged strategy has significantly reduced our MRI burden, particularly for patients with sickle cell disease, in whom cardiac iron overload is rare.

Normal pancreas R2* is < 30 Hz, and values of 30-100 Hz constitute mild pancreatic siderosis, 100-400 Hz moderate siderosis, and values > 400 Hz severe siderosis.28 Both pancreas and cardiac R2* are correlated with glucose intolerance and diabetes.27,41,42 The presence of detectable cardiac iron is a relatively good predictor of overt diabetes, but lacks sensitivity for milder glucose dysregulation.42 Pancreas R2* > 100 Hz is sensitive for all forms of glucose dysregulation (impaired fasting glucose, impaired glucose tolerance, and diabetes), but half of these patients will have normal glucose handling (Noetzli LJ, Mittelman SD, Watanabe RM, Coates TD, and Wood JC, unpublished data). Whether pancreas R2* conveys a prospective risk of subsequent glucose dysregulation requires additional study.
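The grading scheme above can be written as a small lookup. The stated ranges overlap at exactly 100 Hz and leave exactly 400 Hz ambiguous, so this sketch makes an explicit assumption: 100 Hz is graded as moderate and 400 Hz as moderate, with only values above 400 Hz graded as severe. The function name is hypothetical.

```python
def pancreas_siderosis_grade(r2star_hz):
    """Grade pancreatic siderosis from pancreas R2* (Hz).

    normal:   < 30 Hz
    mild:     30 to < 100 Hz
    moderate: 100 to 400 Hz   (boundary handling is an assumption)
    severe:   > 400 Hz
    """
    if r2star_hz < 30.0:
        return "normal"
    if r2star_hz < 100.0:
        return "mild"
    if r2star_hz <= 400.0:
        return "moderate"
    return "severe"

# Illustrative values only.
grades = [pancreas_siderosis_grade(v) for v in (20.0, 60.0, 250.0, 500.0)]
```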

Pancreas R2* measurements have several limitations: (1) they have not gained widespread use, (2) the staged approach to cardiac scanning has not been independently validated, (3) functional correlates require further investigation, and (4) the pancreas may be difficult to locate in older, splenectomized thalassemia major subjects because of glandular apoptosis, fatty replacement, and loss of normal anatomic landmarks. In countries where access to iron chelation has been historically limited, the pancreas may load at a very young age, eliminating its utility as an “early warning system.”27 As a result, we perform cardiac T2* scanning in all patients for whom we cannot reliably estimate pancreas R2*.
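The staged scanning approach described in this section can be summarized as a decision rule. The sketch below reflects our reading of the text only: a reliable pancreas R2* below 100 Hz leads to abdominal follow-up alone, and any other case (including a pancreas that cannot be measured reliably) leads to cardiac T2* scanning. As noted above, the approach has not been independently validated, and the function name and the use of `None` for an unreliable measurement are illustrative assumptions.

```python
from typing import Optional

def needs_cardiac_t2star(pancreas_r2star_hz: Optional[float]) -> bool:
    """Decide whether a cardiac T2* scan is indicated under the staged strategy.

    pancreas_r2star_hz: pancreas R2* in Hz, or None if it could not be
    reliably estimated (for example, because of fatty replacement).
    """
    if pancreas_r2star_hz is None:
        # No reliable pancreas estimate: fall back to cardiac scanning.
        return True
    # Pancreas R2* < 100 Hz essentially precludes cardiac iron deposition.
    return pancreas_r2star_hz >= 100.0

# Illustrative cases: clean pancreas, loaded pancreas, unmeasurable pancreas.
decisions = [needs_cardiac_t2star(v) for v in (45.0, 180.0, None)]
```

Other clinical factors mentioned elsewhere in this review, such as transfusion burden or underlying diagnosis, are deliberately left out of this sketch.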

MRI in iron overload disorders other than thalassemia

MRI has been most widely used in thalassemia major, but it is also essential in thalassemia intermedia, sickle cell disease, myelodysplasia, and other rare anemias.43,44 The risk of cardiac iron overload varies with the degree of effective erythropoiesis, among other factors, being most common in Diamond-Blackfan syndrome and least common in thalassemia intermedia.43,44 Cardiac and endocrine iron overload occur in sickle cell disease, but they are not particularly common.45–47

Future impact of MRI

With routine cardiac screening, patients are now living long enough to encounter increasing iron-mediated endocrine morbidities. Diabetes, hypothyroidism, and hypogonadism remain common among thalassemia patients2,48 and are probably underdiagnosed. The pituitary gland is perhaps the most important initial target for further study because it is easily injured and damage can be difficult to detect until puberty. Hypogonadism occurs in approximately half of thalassemia patients and has long-term consequences for fertility, bone density, and quality of life.2,48 Preclinical iron deposition can be detected using R2 techniques,26 whereas severe iron deposition is associated with a decreased response to gonadotropin-releasing hormone challenge.26 Shrinkage of the pituitary gland is associated with more significant, irreversible loss of gonadotropin production.25 Further clinical validation and technical standardization are necessary before pituitary MRI can be incorporated into routine clinical monitoring, but this is an active area of research.49

MRI screening in clinical practice

Chronic packed RBC transfusion therapy increases liver iron by approximately 1 mg/g (by dry weight) for every 15 mL/kg delivered.33 Therefore, patients receiving more than 10 transfusions (150 mL/kg), in the absence of significant losses, merit at least an initial scan. Cardiac iron loading is rare for patients receiving fewer than 70 units of blood,40 so a screening abdominal examination is a reasonable initial study. Patients with high transfusion load, unknown transfusion burden, or patients with Diamond-Blackfan syndrome (which exhibits early cardiac iron loading43) may warrant cardiac examination on their initial visit.
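The transfusion rule of thumb is simple arithmetic and can be made explicit. This sketch assumes the stated rate of roughly 1 mg/g dry weight of liver iron per 15 mL/kg of packed RBCs, with no chelation and no significant iron losses; the function name is hypothetical.

```python
ML_PER_KG_PER_MG_PER_G = 15.0  # ~15 mL/kg of packed RBCs raises LIC by ~1 mg/g

def estimated_lic_rise_mg_per_g(total_transfused_ml_per_kg):
    """Approximate rise in liver iron concentration (mg/g dry weight)
    from a cumulative transfused volume, ignoring chelation and losses."""
    return total_transfused_ml_per_kg / ML_PER_KG_PER_MG_PER_G

# Ten transfusions of 15 mL/kg each (150 mL/kg in total).
rise_after_ten = estimated_lic_rise_mg_per_g(10 * 15.0)
```

Under these assumptions, 150 mL/kg corresponds to an expected rise of about 10 mg/g dry weight, which is why patients beyond this transfusion exposure merit at least an initial scan.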

Iron measurements should be repeated on an annual basis unless there is a clinical indication for more frequent assessment, such as the use of intensive IV deferoxamine. In patients known to be at high risk of cardiac iron, we obtain liver and cardiac studies during the same imaging session. Patients with a cardiac T2* < 10 milliseconds should be evaluated at 6-month intervals given their a priori risk of cardiac decompensation23; patients in heart failure should be scanned at 3-month intervals. Children < 7 years of age require sedation and clinicians may consider scanning every other year in this age group if trends in serum ferritin are acceptable.
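The follow-up schedule above can be sketched as a rule that returns a suggested interval in months. This is an illustration of the stated intervals only; the function and parameter names are hypothetical, the ordering of the rules (heart failure first, then low T2*, then young age) is our assumption, and other indications for closer follow-up, such as intensive IV deferoxamine, are not modeled because the text gives no specific interval for them.

```python
from typing import Optional

def rescan_interval_months(
    cardiac_t2star_ms: Optional[float] = None,
    in_heart_failure: bool = False,
    age_years: Optional[float] = None,
    ferritin_trend_acceptable: bool = False,
) -> int:
    """Suggest months until the next MRI iron assessment."""
    if in_heart_failure:
        return 3   # patients in heart failure: every 3 months
    if cardiac_t2star_ms is not None and cardiac_t2star_ms < 10.0:
        return 6   # severe cardiac iron loading: every 6 months
    if age_years is not None and age_years < 7 and ferritin_trend_acceptable:
        return 24  # sedation required: consider every other year
    return 12      # default: annual assessment

# Illustrative cases only.
routine = rescan_interval_months(cardiac_t2star_ms=28.0, age_years=15)
high_risk = rescan_interval_months(cardiac_t2star_ms=7.5)
young_child = rescan_interval_months(age_years=5, ferritin_trend_acceptable=True)
```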

Disclosures

Conflict-of-interest disclosure: The author is on the board of directors and an advisory committee for Cooley's Anemia Foundation, has consulted for FerroKin BioSciences, has received research funding from Cooley's Anemia Foundation and Novartis, and has received honoraria from Cooley's Anemia Foundation. Off-label drug use: None disclosed.

Correspondence

John C. Wood, MD, PhD, Department of Pediatrics and Radiology, Children's Hospital Los Angeles, MS#34, 4650 Sunset Blvd, Los Angeles, CA 90027; Phone: (323) 361-5470; Fax: (323) 361-7317; e-mail: johncwood@gmail.com.