Abstract

Transplantation is the only known cure for myelodysplastic syndrome (MDS). While some comparative analyses have demonstrated early transplantation to be the preferred strategy for all MDS patients, many of these analyses are biased. Using newly identified prognostic factors and models, a rational approach to transplantation can be undertaken. Factors such as transfusion dependency, cytogenetics, medical comorbidity, and World Health Organization (WHO) histologic subtype should all be considered when deciding on the role of transplantation for the MDS patient. Unresolved issues in transplantation include the impact of pre-transplant tumor debulking with traditional chemotherapeutic agents or the new DNA hypomethylating agents, and the optimal timing of reduced-intensity conditioning transplantation for older patients or for those with medical comorbidities.

Despite the approval of three novel agents for myelodysplastic syndrome (MDS) therapy, allogeneic hematopoietic stem-cell transplantation (HSCT) is the only known curative procedure for MDS. Transplantation for MDS is common: MDS is currently the third most common indication for allogeneic HSCT reported to the Center for International Blood and Marrow Transplant Research.1 MDS is a disease predominantly of older individuals, and with the increased use and acceptance of reduced-intensity conditioning (RIC) into the eighth decade of life, it is anticipated that the number of transplantations for MDS will continue to increase in the coming years. As novel therapies emerge for MDS, it is imperative to determine the optimal role for and timing of HSCT in the MDS patient.

Comparative registry analyses have documented the benefit of transplantation over conventional supportive and disease-modifying therapeutics in the treatment of MDS,2 but this should not be interpreted as an indication for transplantation in all MDS patients. Despite its curative potential, HSCT is not undertaken lightly, and careful consideration must be given to the appropriateness of each potential transplant recipient and to the timing at which transplantation is offered. Nevertheless, all patients who are potential candidates for transplantation should be referred to a transplantation center early in their disease course so that a discussion regarding the appropriateness of transplantation can occur and a donor search can be initiated when appropriate. This review focuses on appropriate patient selection for HSCT for MDS.

Timing of Transplantation

The timing of transplantation has always been the most controversial topic of discussion for both patients and physicians. Faced with the uncertainty of transplantation outcomes but the certainty of eventual MDS disease progression, decisions are often made based on patient preference. Supporting these decisions are a number of single- and multi-institution experiences that have demonstrated improved outcomes with early transplantation. It is clear that there is inherent bias in these types of analyses, because the patients included are often selected and represent the best transplant candidates by virtue of disease status, overall health, or other unmeasurable factors. This bias, while recognized, is often overlooked because patients who choose early transplantation often identify with those included in the analysis in order to justify their decision.

The majority of analyses that have examined the timing of transplantation for MDS have included patients who underwent myeloablative procedures. For example, two studies presented results of targeted busulfan therapy in patients undergoing related or unrelated donor HSCT and stratified outcomes based on the pre-transplant International Prognostic Scoring System (IPSS) risk score.3,4 While patients were prospectively enrolled in the targeted busulfan treatment program, the decision to undergo transplant was based upon physician and patient preference. As predicted, results were correlated with IPSS stage, and the results suggested that outcomes were improved with transplantation at an earlier stage of disease. There were no relapses among the patients in the lowest-risk IPSS group, whereas the relapse rate was 42% for patients in the highest-risk IPSS group. As a consequence, the 3-year survival rate was 80% in the lowest-risk IPSS group, but was under 30% for patients in the high-risk IPSS group.

de Witte et al. examined outcomes of transplantation in 374 patients with refractory anemia or refractory anemia with ring sideroblasts who underwent transplantation from either matched sibling or unrelated donors.5 Both standard myeloablative and RIC regimens were used, and were assigned by the treating physician in this retrospective review. Unfortunately, fewer than half of the patients had sufficient information available to calculate IPSS risk scores prior to HSCT, and therefore outcomes stratified on this basis were not reliable. Another shortcoming of this analysis was that patients underwent HSCT over a decade-long period, and inherent differences in transplantation technology were evident, with improving overall survival being associated with more recent transplantation. While factors such as conditioning intensity, stem cell source, and donor status did not affect outcome, the authors noted that year of transplantation, recipient age, and duration of identified MDS diagnosis were significantly associated with one another and were all important predictors of overall survival. Earlier transplantation was associated with an absolute increase in overall survival rate of 10% at 4 years (57% vs. 47%, p = 0.02), and was associated with improved relapse-free survival in a multivariable analysis. The authors suggested that earlier transplantation was associated with improved transplantation outcome; however, this should not be interpreted as a recommendation for early transplantation in individuals with low-risk MDS, because the authors did not compare outcomes against a cohort of patients treated with supportive care alone.

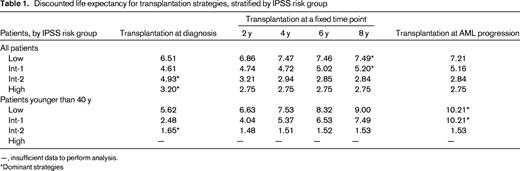

To address the shortcomings of these and other biased retrospective analyses, we generated a Markov decision model to best understand how treatment decisions would affect overall outcome in large cohorts of patients with newly diagnosed MDS.6 The decision model was designed to determine if transplantation at the time of initial diagnosis, delayed a fixed number of years, or at the time of leukemic transformation was the optimal usage strategy for transplantation. Using data from several large, nonoverlapping databases, we demonstrated that the optimal treatment strategy for patients with low- and intermediate-1-risk IPSS disease categories was to delay transplantation until the time of leukemic progression. Immediate transplantation was recommended for patients with high- and intermediate-2-risk IPSS scores (Table 1). These recommendations were stable when adjustments for quality of life were factored in. One of the shortcomings of this analysis was the inability to provide treatment decision guidance at other clinically relevant time points for patients not undergoing immediate transplantation. It is almost certain that other important clinical events such as a new transfusion requirement, recurrent infection, or recurrent bleeding episodes could be considered triggers to move on to transplantation.

Table 1. Discounted life expectancy for transplantation strategies, stratified by IPSS risk group

—, insufficient data to perform analysis.

*Dominant strategies
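The structure of such a decision model can be illustrated with a minimal sketch. The code below is not the published model: it is a simplified yearly Markov cohort simulation, and every state, parameter name, and numeric value in it (`Params`, `discounted_life_expectancy`, the transition probabilities, the 3% discount rate) is a hypothetical assumption chosen only to show how discounted life expectancy can be compared across an immediate, a fixed-delay, and an at-progression transplantation strategy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Params:
    """Hypothetical yearly inputs; none of these values come from the source."""
    p_progress: float          # yearly probability of leukemic progression from MDS
    p_die_mds: float           # yearly probability of death while in untransplanted MDS
    tx_mortality_mds: float    # one-time transplant mortality when transplanted in MDS
    tx_mortality_aml: float    # one-time transplant mortality when transplanted at progression
    p_die_post_tx_mds: float   # yearly death probability after transplant performed in MDS
    p_die_post_tx_aml: float   # yearly death probability after transplant at progression
    discount: float = 0.03     # yearly discount rate (assumed)


def discounted_life_expectancy(p: Params, delay_years: Optional[int],
                               horizon: int = 40) -> float:
    """Discounted life-years for one strategy.

    delay_years=0    -> transplant at diagnosis
    delay_years=k    -> transplant after k years if still in MDS
    delay_years=None -> transplant only at leukemic progression
    In every strategy, patients who progress before being transplanted
    are transplanted at progression (a simplifying assumption).
    """
    if p.p_progress + p.p_die_mds > 1:
        raise ValueError("p_progress + p_die_mds must not exceed 1")
    mds, tx_early, tx_late = 1.0, 0.0, 0.0   # cohort fractions alive in each state
    total = 0.0
    for year in range(horizon):
        # Scheduled transplant for everyone still in MDS at the chosen time.
        if delay_years is not None and year == delay_years:
            tx_early += mds * (1 - p.tx_mortality_mds)
            mds = 0.0
        # Credit one discounted life-year to everyone alive at the start of the cycle
        # (no half-cycle correction in this sketch).
        total += (mds + tx_early + tx_late) / (1 + p.discount) ** year
        # End-of-year transitions.
        progressed = mds * p.p_progress
        mds *= 1 - p.p_progress - p.p_die_mds
        tx_early *= 1 - p.p_die_post_tx_mds
        tx_late = tx_late * (1 - p.p_die_post_tx_aml) + progressed * (1 - p.tx_mortality_aml)
    return total


if __name__ == "__main__":
    # Purely illustrative inputs for a slowly progressing cohort.
    example = Params(p_progress=0.03, p_die_mds=0.04, tx_mortality_mds=0.25,
                     tx_mortality_aml=0.45, p_die_post_tx_mds=0.03,
                     p_die_post_tx_aml=0.08)
    for label, delay in [("immediate", 0), ("delay 2 years", 2), ("at progression", None)]:
        print(f"{label:>15}: {discounted_life_expectancy(example, delay):.2f} discounted life-years")
```

Which strategy comes out ahead in this sketch depends entirely on the assumed inputs. The point is only the mechanics: a cohort is moved between health states each cycle, life-years are discounted, and the strategy with the largest total is the dominant one for that risk group.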

In addition to identifying clinically relevant events that might trigger a decision to undergo transplantation, there may be certain subgroups of patients with low- or intermediate-1-risk myelodysplasia in whom early transplantation may offer a survival advantage, and in these patients other prognostic systems may be of some utility.

Newer Prognostic Factors and Scoring Systems

Investigators at the MD Anderson Cancer Center analyzed the outcomes of 865 patients with low- or intermediate-1-risk IPSS disease referred to the center between 1976 and 2005.7 In this model, clinical data collected at the time of referral were used, whereas the IPSS used data collected at the time of patient diagnosis. Using regression techniques, the authors were able to subdivide these IPSS low- and intermediate-1-risk patients based on the degree of thrombocytopenia, patient age, cytogenetics, and marrow blast count into three groups of patients with different clinical outcomes. It is possible that patients in the highest-risk of these three groups should also proceed to transplantation early after diagnosis, but this has not been tested in a decision-analysis model.

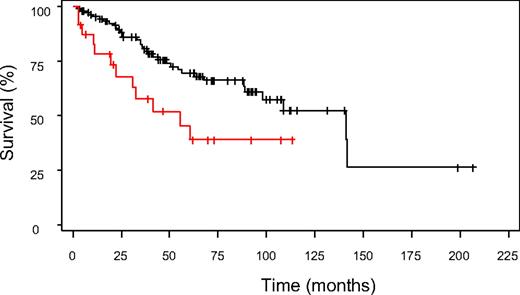

To determine whether the clinical factors identified in the MD Anderson model added prognostic information beyond the IPSS, we applied this algorithm to patients in the original IPSS cohort. Using the MD Anderson scoring system, 136 low- and intermediate-1-risk patients 18 to 60 years of age could be further subclassified into prognostically relevant subgroups. Collapsing the MD Anderson scores (due to small patient numbers) allowed the identification of two distinct patient subgroups within the low- and intermediate-1-risk IPSS groups. These groups had median survival times of 141.2 and 55.2 months, respectively (p = 0.012, Figure 1, unpublished data). Importantly, these two subgroups were not simply representative of the original low- and intermediate-1-risk IPSS groups. In fact, one-third of the intermediate-1-risk patients were grouped into the higher-risk group with the MD Anderson score, whereas all of the patients classified as low risk by IPSS remained in the low-risk group with the MD Anderson system. Thus, it is possible that some patients with higher-risk intermediate-1 IPSS scores may benefit from earlier, more aggressive intervention, including transplantation, although this also requires formal testing either in a mathematical model or in a prospective clinical trial.

Figure 1. Application of the MD Anderson scoring system to IPSS low- and intermediate-1-risk patients

The MD Anderson scoring system includes two gradations of thrombocytopenia (<50 × 10⁹/L and 50–200 × 10⁹/L), but only one classification for anemia (hemoglobin <10 g/dL), which does not reliably capture the red cell transfusion requirement. Transfusion frequency and its sequelae, particularly iron overload, have recently both been demonstrated to be important prognostic factors in MDS transplant outcomes. For example, Platzbecker et al. compared the outcomes of patients who were and were not transfusion dependent at the time of transplantation.8 Even though patients in the transfusion-dependent group had more low-risk features than those in the transfusion-independent group, overall survival was inferior in the transfusion-dependent group, with a 3-year overall survival rate of 49%, compared with 60% in the transfusion-independent group (p = 0.1).

Because transfusion dependency appears to have prognostic importance, it should be considered in choosing patients for transplantation. As a result of the evolving role that transfusion support plays in determining prognosis, one study examined a cohort of patients stratified by the IPSS classification system to determine the relevance of the World Health Organization (WHO) classification and transfusion requirement among different IPSS groups. The new WHO classification system for myelodysplastic disorders clearly separates patients into distinct prognostic categories.9 For patients with low- and intermediate-1-risk IPSS scores, the WHO histologic classification scheme was able to significantly differentiate survival among the different histologic subtypes within each IPSS range.9 Patients in higher-risk IPSS categories did not have significantly different survival outcomes when stratified by WHO histology. More importantly, the authors were able to demonstrate a significant effect on survival based on the requirement for transfusion support. As would be expected, outcomes in patients with higher-grade myelodysplasia were affected less by transfusion requirement than were outcomes in patients with less-advanced WHO histologies. Because transfusion requirement retained its impact even among WHO-stratified patients, it was then incorporated into the newer WHO Prognostic Scoring System (WPSS).10 In this system, a regular transfusion requirement (defined as requiring at least one transfusion every 8 weeks in a 4-month period) was given the same regression weight as progressing between cytogenetic risk groups. In contrast to the initial IPSS publication, which carries prognostic information only at the time of initial MDS diagnosis, this model was time dependent, such that patients could be continually reevaluated by the scoring model and updated prognostic information could be generated at any time point in the patient's treatment course. Thus, the development of new cytogenetic changes or the development of an ongoing transfusion need would add usable information that could help to guide treatment decisions, including those that affect the decision to proceed to transplantation. A formal decision analysis using the WPSS is currently under way (M. Cazzola, personal communication), and the results may supplant the decision analysis based on the IPSS alone. It is also important to note that the WPSS has already been shown to have independent prognostic significance in predicting survival and relapse after allogeneic transplantation.11
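The time-dependent nature of this kind of score can be sketched in a few lines. The example below is illustrative only: the point values and risk-group cutoffs are assumptions taken from the WPSS table as it is commonly cited (WHO category 0 to 3 points, karyotype 0 to 2 points, regular transfusion requirement 1 point) and should be checked against reference 10 before any use. The function names `wpss_score` and `wpss_risk_group` are hypothetical.

```python
# Assumed point values, as commonly cited for the WPSS; verify against the
# original publication before relying on them.
WHO_POINTS = {
    "RA": 0, "RARS": 0, "5q-": 0,
    "RCMD": 1, "RCMD-RS": 1,
    "RAEB-1": 2,
    "RAEB-2": 3,
}
KARYOTYPE_POINTS = {"good": 0, "intermediate": 1, "poor": 2}


def wpss_score(who_category: str, karyotype: str, transfusion_dependent: bool) -> int:
    """Additive score: a regular transfusion requirement adds one point,
    the same step as moving up one cytogenetic risk group."""
    return (WHO_POINTS[who_category]
            + KARYOTYPE_POINTS[karyotype]
            + (1 if transfusion_dependent else 0))


def wpss_risk_group(score: int) -> str:
    """Map a total score to a risk group (cutoffs assumed as noted above)."""
    if score == 0:
        return "very low"
    if score == 1:
        return "low"
    if score == 2:
        return "intermediate"
    if score <= 4:
        return "high"
    return "very high"


if __name__ == "__main__":
    # The same hypothetical patient scored at two time points.
    at_diagnosis = wpss_score("RCMD", "good", transfusion_dependent=False)
    after_new_transfusion_need = wpss_score("RCMD", "good", transfusion_dependent=True)
    print(at_diagnosis, wpss_risk_group(at_diagnosis))
    print(after_new_transfusion_need, wpss_risk_group(after_new_transfusion_need))
```

Rescoring the same patient after a new transfusion requirement develops moves the total up by one point, which is how a dynamic system of this kind can update prognosis during follow-up rather than only at diagnosis.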

If transfusion dependence is an independent risk factor for excess mortality after transplantation, then there must be a measurable effect of this dependence that is independent of disease activity. The result of excess transfusion is the accumulation of stored iron, which is often reflected in the serum ferritin level. At our own center, we reported that an elevated pre-HSCT serum ferritin level was strongly associated with lower overall survival and disease-free survival after transplantation; this association was limited to a subgroup of patients with acute leukemia or MDS and was largely attributable to increased treatment-related mortality.12 Similarly, a prospective single-institution study of 190 adult patients undergoing myeloablative transplantation demonstrated that elevated pre-transplant serum ferritin was associated with increased risk of 100-day mortality, acute graft-versus-host disease, and bloodstream infections or death as a composite end point.13

Other, more recently described factors may also be important in determining MDS outcome, and may help to guide treatment toward or away from HSCT. For example, Haase et al. recently analyzed individual cytogenetic changes in over 2000 individuals with MDS,14 and identified a number of favorable cytogenetic changes that were associated with median survival times ranging from 32 to 108 months, with some subgroups having median survival times that were not reached. In addition, a number of individual cytogenetic changes not addressed in the IPSS were noted to be associated with an intermediate prognosis.

At a finer level, molecular prognostication may hold even more information than cytogenetics. Mills et al. recently described a gene-expression-profile methodology to accurately predict the risk of transition from MDS to acute myeloid leukemia.15 This model was able to accurately differentiate groups of patients with a time to transformation of greater or less than 18 months, and was more powerful at predicting time to leukemic transformation and overall survival when compared with the IPSS scoring system. This gene-expression profile needs to be tested in a prospective trial in which it is used to guide therapy to determine if it truly is effective at preventing adverse survival outcomes associated with leukemic transformation.

Comorbidity and Conditioning Intensity

It is known that comorbidity increases the likelihood of adverse outcomes in medicine generally and in hematologic malignancies specifically, although the usefulness of comorbidity scores in the latter setting has only recently been recognized.16 Several comorbidity scores have been developed to help provide prognostic information in myeloid malignancies. In MDS, the Hematopoietic Cell Transplantation Comorbidity Index (HCT-CI), developed by Sorror et al.,17 has been shown to carry prognostic value even in patients with MDS not undergoing transplantation.18 Sorror et al. demonstrated that the HCT-CI has specific prognostic utility in a group of patients undergoing transplantation for acute myeloid leukemia and MDS.19 In this analysis, the patients were divided into four separate groups, with each group being defined on the basis of comorbidity and malignant disease risk. Within each of the four risk groups, patients who underwent myeloablative and RIC transplantation were compared. In each of the four groups, the RIC arm experienced a higher rate of relapse; however, the treatment-related mortality rates were all lower than those in the myeloablative arm. As a result, disease-free and overall survival were similar between conditioning arms in all four groups.

The effects of elevated ferritin levels are largely limited to patients undergoing myeloablative transplantation; however, an elevated ferritin level should be considered a measurement of comorbidity. Ferritin levels could therefore influence the decision to pursue full-intensity or RIC transplantation. Optimally, if myeloablative transplantation is planned, proceeding to transplantation prior to the accumulation of a critical amount of iron should be pursued; alternatively, chelation therapy prior to transplantation can be considered, although this latter approach has not yet been demonstrated to be effective in prospective clinical trials.

The consideration of comorbidity prior to transplantation is even more relevant now that RIC transplantation has been shown to be effective in the treatment of MDS (reviewed in Oliansky et al.20). There are several analyses that have compared RIC transplantation with conventional myeloablative transplantation, and the majority of them suggest similar outcomes. For example, at our center, the outcomes of 136 patients with MDS or acute myelogenous leukemia were compared when stratified by conditioning regimen intensity.21 Overall survival at 2 years was not significantly different between individuals undergoing RIC transplantation and those who received myeloablative conditioning (28% vs. 34%; p = 0.89); however, the causes of treatment failure were significantly different, with more relapse in the RIC group (61% vs. 38%; p = 0.02), but higher treatment-related mortality in the myeloablative group (32% vs. 15% at 100 days), as anticipated. A larger analysis with similar results was presented by Martino et al. on behalf of the European Blood and Marrow Transplantation Group.22 Finally, a retrospective Center for International Blood and Marrow Transplant Research (CIBMTR) review of the outcomes of 550 patients age 50 years or older who underwent matched sibling donor transplantation demonstrated no difference between myeloablative and RIC outcomes.1 None of these studies adjusted for comorbidity, and it is almost certain that if reanalyzed, the RIC cohorts would contain a higher proportion of patients with advanced comorbidities. Nonetheless, with similar outcomes, some patients have now opted for earlier RIC transplantation even when they do not have comorbidities, because treatment-related morbidity and mortality after this procedure are lower than after traditional myeloablative transplantation.

The optimal timing of RIC transplantation needs to be addressed in some form of prospective clinical trial or, if such a trial is not feasible, with mathematical Markov modeling. The issues surrounding the timing of RIC transplantation are more complex now that there are several Food and Drug Administration (FDA)-approved treatment options that can reduce transfusion dependence and even increase overall survival in transfusion-dependent patients.23 Whether these agents should in fact be recommended prior to transplantation is debatable. The reasons for considering these agents are numerous, and include delaying a major procedure such as transplantation and the possibility of a reduction in marrow blasts prior to transplantation. While the latter outcome is attractive, and theoretically should improve outcomes, this has not been demonstrated in unbiased analyses. Since the introduction of these agents, several small studies have examined the feasibility of administering them prior to transplantation, but thus far, there are no obvious signs of either benefit or adverse consequences.24,25 A formal decision analysis examining the evolving role of transplantation in the context of the availability of DNA hypomethylating therapy has been started through the CIBMTR (Study LK-0802).

Conclusions

Appropriate patient selection for transplantation is the first step in maximizing outcomes after transplantation for MDS. Because new therapeutics offer significant palliation and provide symptom control, the decision to pursue transplantation is not undertaken lightly. However, for younger patients, in whom an MDS-related death is very likely, early transplantation is generally recommended. Several factors influence the decision to undergo transplantation, and many of these revolve around prognostic indicators that are constantly evolving. The goal of these systems is to identify patients in whom transplantation outcomes are likely to exceed non-transplantation outcomes. This balance is most relevant in older patients in whom RIC transplantation is indicated but for whom the optimal timing of transplantation has not yet been defined.

Disclosures

Conflict-of-interest disclosure: The author declares no competing financial interests. Off-label drug use: None disclosed.

Correspondence

Corey Cutler, MD, MPH, FRCP(C), Assistant Professor of Medicine, Harvard Medical School, Dana-Farber Cancer Institute, 44 Binney St., D1B13, Boston, MA 02115; Phone: (617) 632-5946; Fax: (617) 632-5168; e-mail: corey_cutler@dfci.harvard.edu