TO THE EDITOR:

Venous thromboembolism (VTE), including deep venous thrombosis and pulmonary embolism, is a significant cause of preventable morbidity and mortality,1 with rising incidence due to increased rates of obesity, surgery, and cancer.2 Guidelines advocate for personalized VTE risk assessment,3,4 and current strategies include provider education, medical admission order sets with VTE prophylaxis, and electronic alerts for high-risk patients.5 Despite these guidelines, VTE prophylaxis remains inaccurately used,6,7 and both diagnosis and management of VTE are areas that need improvement.8,9

One underexplored strategy is the use of artificial intelligence (AI) and its subbranch machine learning (ML). AI/ML is flexible and scalable, bypassing traditional statistical assumptions (eg, type of error distribution or proportional hazards assumption), which is advantageous for risk stratification, diagnosis, and survival predictions.10 ML algorithms can also analyze different types of data (eg, laboratory/imaging data and physician notes) and incorporate them into predictions.11 Major ML applications in VTE include machine vision for detecting VTE on imaging, natural language processing (NLP) for identifying VTE diagnoses in the medical chart, and predictive modeling for estimating clot risk.12 Although recent meta-analyses show AI/ML tools are effective, their clinical implementation remains limited, requiring further research to bridge this gap.13 We have previously conducted studies to assess clinicians’ acceptance of and views on the integration of AI/ML in VTE prevention and management.14 However, successful application of computational technologies requires input from subject matter experts, such as informaticians for AI/ML. To harness the expertise of informaticians specializing in AI/ML, interviews were conducted and thematically analyzed for insights on using these tools for VTE.

A survey was conducted from August 2021 to August 2022 to explore informatician attitudes toward using AI for VTE management. A targeted convenience sample was recruited through professional organizations and health informatics funding awardees.15,16 Respondents were invited to submit their email addresses to participate in qualitative interviews, and they recommended other informaticians to interview, with recruitment continuing until thematic saturation. Participants received $50 gift cards for their time.

Beth Israel Deaconess Medical Center’s Institutional Review Board deemed the study exempt from review as nonhuman research. An interview guide included scenarios of using NLP to detect VTE cases, machine vision to interpret imaging studies, predictive modeling of clot risk, and generative AI to communicate with patients (supplemental Appendix A). AI was defined broadly as the use of computers to imitate the problem-solving skills of humans.

Researcher A.G. conducted semistructured videoconference interviews. Participants completed a demographic survey in REDCap (Research Electronic Data Capture).17,18 Researchers recorded, transcribed, and coded the interviews in duplicate and met virtually to perform analyses. The codebook had 3 parent codes and 20 total daughter codes (supplemental Appendix B), which included deductive codes (determined a priori) and inductive codes (that emerged from the text). Two coders (A.G. and S.Z.) separately coded each transcript with Dedoose software (version 9.0.17 [2021]; SocioCultural Research Consultants, LLC, Los Angeles, CA). Coders reviewed and discussed the transcripts with each other to ensure intercoder reliability. Differences in coding were resolved by discussion. Researchers adhered to consolidated criteria for reporting qualitative research guidelines (supplemental Appendix C).

Of the 174 survey respondents, 40 informaticians provided their email addresses, and 40 more were recruited through snowball sampling. Ultimately, 24 individuals participated (6 from the survey). Participants included 6 clinician informaticians, 9 data scientists, 3 biomedical/computational biologists, and 6 with an unspecified “other” designation. Among the 13 participants with a clinical focus (including 10 with medical training), hematology was the most common (n = 6), followed by general medicine (n = 4), oncology (n = 2), and pulmonary/critical care (n = 1). The median age was 37 years (interquartile range, 33-45), and 17 participants were male. Fifteen participants had academic affiliations (maximum of 4 from a single institution), and 3 were in medical training. Overall, 15 institutions were represented (8 from the Northeast, 3 from the Southwest, 2 from the Midwest, and 2 from Veterans Affairs).

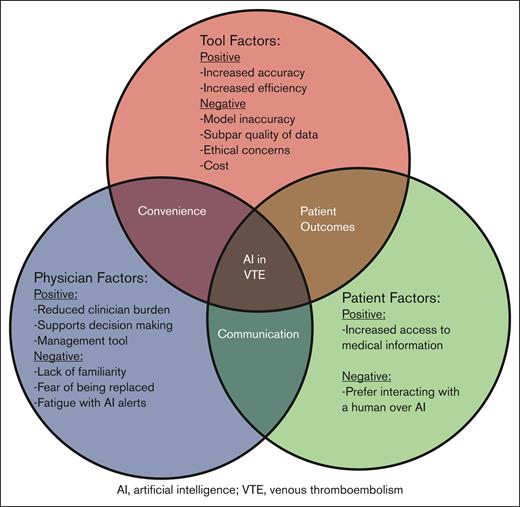

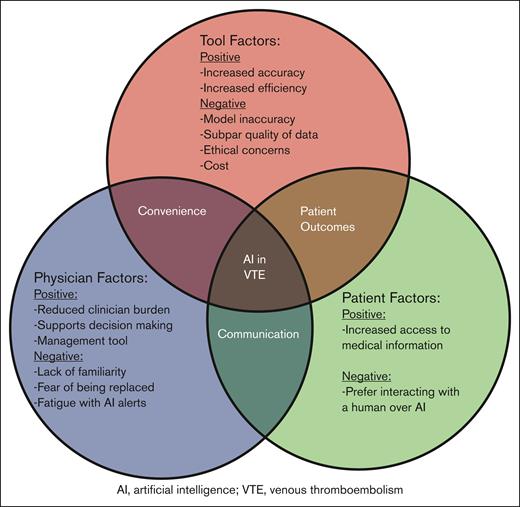

Major themes that emerged were that AI is a powerful tool to reduce clinician burden and is well suited for managing VTE (Table 1). Challenges included ethical concerns, subpar quality of training data, potential for model inaccuracy, and clinician unfamiliarity (Figure 1).

Figure 1. Conceptual framework for the use of AI in prevention and management of VTE. This diagram illustrates the intersecting factors influencing the use of AI in VTE. The 3 primary factors include tool factors, physician factors, and patient factors. Each category highlights both the positive and negative aspects identified by the informaticians.

Increased accuracy and efficiency were identified as strengths that could potentially decrease clinician workload: “...clinicians are doing the best they can for their patients and balancing a lot of things. So, when risk assessment or other types of clinical decision making can be automated, it can take the load off a physician” (participant 4 [P4]). Clinicians often face multiple demands on their time, and automating the parts of medical practice that do not require critical thinking can be a substantial advantage. One approach is to use AI to extract relevant information from the chart and calculate a risk score, sparing the physician from searching numerous places in the patient’s chart to abstract that information. Another is for AI to suggest best practices for management and present the chart data that support the suggested plan. These suggestions are not imperatives but allow physicians to “double check” their work and ensure that each patient receives high-quality care. Such use could decrease clinical workloads and enhance patient safety and the quality of health care delivery.19 Clinical trials, such as the Mammography Screening with AI trial of AI-assisted mammography,20 support the themes of increased efficiency and reduced clinician burden with the use of AI.

Participants also reported that VTE management specifically is an area of medicine that can be managed with AI, given that VTE is a distinct end point readily recorded in the medical chart and incorporated into algorithms. One participant said VTE is “a well-defined problem or entity, so it’s easy to get training data and to predict the outcome” (P13). The literature supports the claim that AI models are accurate, because meta-analyses have shown that they perform very well in VTE prediction and diagnosis,12,21 although ML involvement in these models requires innovation to improve transparency.22

Among the challenges, participants raised concerns about AI’s inconsistent performance across different races, genders, and socioeconomic statuses, as well as privacy issues related to personal data use. They emphasized the need to inform patients when AI is used in their care, reflecting broader literature on algorithmic fairness and privacy.23 Historical biases exist in the data that are used to train AI, and this can lead to representation gaps that disproportionately affect the medical care of minorities.24 Using inclusive data sets and performing bias audits may help to curtail the negative effects. Independent of inequity, poor quality of training data was also identified as a challenge. For example, a lack of standardization in the vocabulary used in physician notes could affect the performance of NLP tools. Model inaccuracy can manifest as incorrect outputs (“these models do mess up and make up information” [P7]) and overidentification of findings without clinical significance. These mistakes could occur when an AI tool is asked to provide an answer that is beyond its capabilities or as a consequence of the aforementioned poor training data. Human oversight and techniques that require AI to explain its output could help filter out inaccurate outputs. These themes overlap with those identified in the previous survey study, including concerns around data quality, the black box nature of models, and ethical issues.15 Moreover, participants noted that provider compliance is often difficult to achieve in a clinical setting, frequently citing alarm fatigue and fear of being replaced. Clinicians were identified as a heterogeneous group, and those who were unfamiliar with AI “can be very skeptical or have a lot of unrealistic expectations” (P18). Prior research shows that physicians who consider AI to be a labor-saving tool are more likely to trust it, whereas physicians who perceive it as a high-risk intervention are less likely to do so.25

Novel ideas emerged that sometimes contradicted the overall themes. Some felt that chatbots are easier to communicate with than humans: “I can see a patient wanting to have conversations about mental health or behavioral health or sexual health with an AI before they would want to necessarily have that face to face conversation with a physician” (P17); and that patients prefer the AI’s convenience: “…patients also don’t like sometimes how their providers take a while to get back to them…(AI is) a synchronous tool that can be accessible whenever they’re ready or whenever they have a question” (P10). Some believed AI could worsen accuracy and efficiency: “nobody knows the right way to use these systems…such that you can provide like bad interactions or…(have) confusing results…it might reduce overall efficiency and it might reduce overall accuracy in terms of the final outcomes of the diagnosis of these treatment plans” (P1). Informaticians perceived that AI could erode physician authority: “I think it could affect the perception negatively of physicians because some patients might feel that, well, a computer can do this so why should I trust a doctor” (P18). Finally, cost was noted as a barrier: “AI is not a cheap thing to do. It’s going to take lots of energy to run all these data centers” (P9).

Multiple participants commented on the implementation of AI. Suggestions included rigorous clinical testing: “AI clinical trial...is a very good way to validate your model” (P12). Informaticians also recognized the value of a human provider over AI by assuming the patient perspective: “…when I am sick, I would want a doctor listening to me and being there for me” (P21) and “…if people know it’s AI, it just doesn’t work” (P17). Other researchers have also found that although chatbot responses for health questions were more empathic than physician responses,26 patients prefer human interaction and inherently trust physicians over AI.27

Overall, promoting cross talk between clinicians and informaticians is critical in ensuring the positive aspects of AI can be retained, while the negative aspects are minimized. Interdisciplinary groups in which stakeholders such as physicians, data scientists, and patient representatives can communicate would facilitate this cross talk and enhance implementation.28 Clinicians can provide feedback to informaticians about the nuances of their clinical workflows to develop better AI tools. In turn, informaticians can provide education to clinicians about how to use AI tools effectively and what their limitations are. Governance and regulatory structures could standardize this process on a larger scale and help with resource allocation.29

This study has limitations. Informaticians who agreed to participate in the interviews may have a more positive view than those who did not volunteer. Although we attempted to include individuals from diverse backgrounds, different stages of training, and multiple institutions, viewpoints may not be representative of all informaticians. Given that a relatively niche population was included, we relied on self-identification and did not have specific training or educational requirements in AI/ML technology to participate, which introduced heterogeneity in participation. Similarly, among participants with a clinical focus, clinical background varied and included trainees. We believe this reflects the breadth of clinicians involved in VTE management and in fact can help gather diverse but important viewpoints in qualitative research, but we acknowledge that opinions may vary based on training, experience, and specific expertise. Due to the broad definition of AI used, some participants included statistical methods in their discussion, which have different properties from those of ML. Finally, although informaticians have domain knowledge of AI, they may lack knowledge about the clinical context of VTE.

In conclusion, informaticians felt that AI validated as safe and effective could be suitable for use in clinical practice, including for VTE specifically. Training and education in AI in the context of a health care setting will be important for compliance and integration into routine health care delivery.

Acknowledgments: This study was supported by the Centers for Disease Control and Prevention (CDC), Atlanta, GA, Cooperative Agreement #DD20-2002.

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the CDC.

Contribution: A.G. contributed to data collection and analysis and writing and editing of the manuscript; B.D.L. contributed to study design and editing of the manuscript; S.Z. contributed to data collection and analysis; R.P.R., L.L., L.D., A.A., N.R., K.A., I.V., and J.I.Z. contributed to editing of the manuscript; M.A.S. contributed to qualitative research design and editing of the manuscript; and R.P. contributed to study design and writing and editing of the manuscript.

Conflict-of-interest disclosure: J.I.Z. received prior research funding from Incyte and Quercegen; data safety monitoring board fees from Sanofi and CSL Behring; consultancy fees from Calyx, Incyte, Bristol Myers Squibb, Regeneron, and Med Learning Group; and is supported through Memorial Sloan Kettering Cancer Center Support Grant/Core grant (P30 CA008748). R.P.R. received research funding from Bristol Myers Squibb and Janssen; consultancy/advisory board participation fees from Abbott, Bristol Myers Squibb, Dova, Janssen, Inari, Inquis, and Penumbra; is the National Lead Investigator for Penumbra, STORM-PE trial; and is the President of The Pulmonary Embolism Response Team Consortium. M.A.S. receives grant support from the National Institutes of Health/National Institute of Aging (K24AG071906). R.P. received consultancy fees from Merck Research. The remaining authors declare no competing financial interests.

Correspondence: Rushad Patell, Hematology and Hematologic Malignancies, Beth Israel Deaconess Medical Center, 330 Brookline Ave, Boston, MA 02215; email: rpatell@bidmc.harvard.edu.

Author notes

Data are available from the corresponding author, Rushad Patell (rpatell@bidmc.harvard.edu), on request.

The full-text version of this article contains a data supplement.