Key Points

Hazards regarding mortality and leukemic transformation in MDS diminish over time in higher-risk but remain stable in lower-risk patients.

This change of hazard indicates time-dependent attenuation of power of basal risk scores, which is relevant for clinical decision making.

Abstract

In myelodysplastic syndromes (MDSs), the evolution of risk for disease progression or death has not been systematically investigated despite being crucial for correct interpretation of prognostic risk scores. In a multicenter retrospective study, we described changes in risk over time, the consequences for basal prognostic scores, and their potential clinical implications. Major MDS prognostic risk scoring systems and their constituent individual predictors were analyzed in 7212 primary untreated MDS patients from the International Working Group for Prognosis in MDS database. Changes in risk of mortality and of leukemic transformation over time from diagnosis were described. Hazards regarding mortality and acute myeloid leukemia transformation diminished over time from diagnosis in higher-risk MDS patients, whereas they remained stable in lower-risk patients. After approximately 3.5 years, hazards in the separate risk groups became similar and were essentially equivalent after 5 years. This fact led to loss of prognostic power of different scoring systems considered, which was more pronounced for survival. Inclusion of age resulted in increased initial prognostic power for survival and less attenuation in hazards. If needed for practicability in clinical management, the differing development of risks suggested a reasonable division into lower- and higher-risk MDS based on the IPSS-R at a cutoff of 3.5 points. Our data regarding time-dependent performance of prognostic scores reflect the disparate change of risks in MDS subpopulations. Lower-risk patients at diagnosis remain lower risk whereas initially high-risk patients demonstrate decreasing risk over time. This change of risk should be considered in clinical decision making.

Introduction

The myelodysplastic syndromes (MDSs) are clonal hematopoietic stem cell disorders that result in ineffective hematopoiesis in the bone marrow, which is associated with peripheral blood cytopenias and a risk of developing acute myeloid leukemia (AML) in approximately one third of patients.1 Because of the heterogeneous nature and wide range of clinical courses of this disease, classification and prognostication are of paramount importance for disease management. Independent prognostic instruments have been developed over the years2 that function as additional staging systems. Most scoring systems assess prognosis at the time of diagnosis, assuming stable predictability over the disease course. An earlier single-center study has shown moderate loss of prognostic power over time in scoring systems that use clinical parameters, whereas systems that focus on cytogenetics and comorbidity maintained prognostic power.3 Recently, new prognostic scoring systems have been developed that provide improved prognostication for MDS patients.4-7 Previously, the comparison of scores was provided only from the time of diagnosis,7 and the stability of risk over time and the clinical applicability for time points after diagnosis remain unclear.

Therefore, the aim of this multicenter retrospective study was to assess the relative stability of the newly developed scoring systems over time, to compare the observed time-related changes in prognostic power among these systems, and to relate these changes to the time dependence of hazards. These data can then be applied to MDS populations that differ in risk for clinical outcomes when evaluated over time after diagnosis, and their clinical implications can be considered.

Patients and methods

Patients

This study is based on 7212 untreated (ie, they did not receive disease-modifying treatment during MDS phase; disease-specific treatment was allowed after progression to AML) primary MDS patients from 19 institutional databases comprising the International Working Group for Prognosis in MDS, which generated the revised International Prognostic Scoring System (IPSS-R) for MDS7 under the aegis of the MDS Foundation, Inc., in accordance with institutional review board approvals. Patient characteristics compared well with those in other MDS series: median age was 71 years and 60% of the patients were male. After a median follow-up time of 4.0 years, median overall survival was 3.8 years (range, 0.1-39.75 years; 95% confidence interval, 3.7-4.0 years), and median time to transformation to AML was not reached, with 25% of patients transforming to AML after 6.8 years. Patients were diagnosed and classified by using the French-American-British (FAB) and/or World Health Organization (WHO) morphologic classifications; cytogenetic patterns were classified by original IPSS subtypes8 and by the refined proposal that was integrated into the IPSS-R.9

Investigated parameters and scoring systems

First, single-score components (hemoglobin, absolute neutrophil count, platelet count, bone marrow blast percentage, and cytogenetics) and differentiating features for the IPSS-R (age, performance status, ferritin, lactate dehydrogenase, β2-microglobulin, and marrow fibrosis)7 were analyzed for stability of prognostic power over time. Then the following scoring systems were analyzed: IPSS,8 IPSS-R and IPSS-RA (ie, IPSS-R including age),7 the original WHO classification-based Prognostic Scoring System (WPSS) applying transfusion need,4 its modification using hemoglobin thresholds (WPSS 2011)10 and its modification including age (WPSS-A),11 and the Lower-Risk Prognostic Scoring System (LR-PSS).5 For a detailed description and distribution of variables analyzed, see Table 1 and the supplement from Greenberg et al.7 In this analysis, the IPSS-R7 was calculated for all patients (n = 7212), whereas the revised WPSS (WPSS-R)11 was applicable only to patients classified by WHO criteria (n = 5763; ie, missing a portion of patients present in the IPSS-R). Therefore, when comparing scores including WPSS, only WHO-classified patients were analyzed.

Table 1. Dxy values for specific scores and single clinical predictors

Dxy values for specified minimum observation times after diagnosis (months). OS, overall survival; TT, time to transformation; the number in each column header is the minimum observation time in months.

| Score or predictor | OS 0 | OS 12 | OS 24 | OS 36 | OS 48 | OS 60 | TT 0 | TT 12 | TT 24 | TT 36 | TT 48 | TT 60 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| **Scoring system** | | | | | | | | | | | | |
| IPSS | 0.37 | 0.30 | 0.22 | 0.16 | 0.11 | 0.07 | 0.49 | 0.38 | 0.34 | 0.26 | 0.25 | 0.20 |
| IPSS-R | 0.43 | 0.35 | 0.27 | 0.20 | 0.14 | 0.08 | 0.53 | 0.42 | 0.38 | 0.31 | 0.24 | 0.07 |
| IPSS-RA | 0.46 | 0.38 | 0.31 | 0.26 | 0.22 | 0.18 | 0.53 | 0.43 | 0.38 | 0.32 | 0.25 | 0.13 |
| WPSS | 0.44 | 0.36 | 0.29 | 0.25 | 0.18 | 0.21 | 0.59 | 0.48 | 0.39 | 0.35 | 0.30 | 0.22 |
| WPSS 2011 | 0.41 | 0.34 | 0.25 | 0.18 | 0.14 | 0.11 | 0.53 | 0.45 | 0.40 | 0.34 | 0.33 | 0.22 |
| WPSS-A | 0.44 | 0.38 | 0.31 | 0.24 | 0.20 | 0.19 | 0.52 | 0.46 | 0.40 | 0.33 | 0.27 | 0.22 |
| LR-PSS | 0.27 | 0.25 | 0.25 | 0.20 | 0.18 | 0.19 | 0.26 | 0.23 | 0.24 | 0.18 | 0.16 | 0.17 |
| LR-PSS in lower-risk patients | 0.21 | 0.20 | 0.21 | 0.18 | 0.18 | 0.18 | 0.16 | 0.18 | 0.20 | 0.14 | 0.12 | 0.10 |
| IPSS-RA in lower-risk patients | 0.28 | 0.26 | 0.25 | 0.24 | 0.23 | 0.19 | 0.37 | 0.32 | 0.28 | 0.25 | 0.21 | 0.09 |
| IPSS-RA WHO only | 0.48 | 0.40 | 0.34 | 0.28 | 0.23 | 0.20 | 0.55 | 0.47 | 0.40 | 0.35 | 0.28 | 0.15 |
| IPSS-R very-low-/low-/intermediate-risk vs high-/very-high-risk patients | 0.31 | 0.20 | 0.12 | 0.06 | 0.02 | 0.01 | 0.37 | 0.24 | 0.19 | 0.13 | 0.08 | 0.05 |
| IPSS-R-DLH ≤3.5/>3.5 | 0.33 | 0.26 | 0.19 | 0.13 | 0.07 | 0.03 | 0.44 | 0.33 | 0.29 | 0.25 | 0.19 | 0.06 |
| **Predictor** | | | | | | | | | | | | |
| Hemoglobin | 0.21 | 0.17 | 0.16 | 0.11 | 0.08 | 0.05 | 0.16 | 0.12 | 0.12 | 0.08 | 0.06 | 0.08 |
| Neutrophils | 0.11 | 0.09 | 0.07 | 0.04 | 0.03 | 0.02 | 0.16 | 0.15 | 0.12 | 0.10 | 0.05 | 0.01 |
| Platelets | 0.23 | 0.14 | 0.09 | 0.04 | 0.03 | 0.04 | 0.17 | 0.11 | 0.07 | 0.01 | −0.01 | 0.00 |
| Bone marrow blasts | 0.30 | 0.26 | 0.18 | 0.13 | 0.10 | 0.06 | 0.48 | 0.38 | 0.30 | 0.27 | 0.25 | 0.12 |
| Age | 0.09 | 0.11 | 0.16 | 0.19 | 0.22 | 0.26 | 0.04 | 0.02 | 0.05 | 0.03 | −0.03 | 0.16 |
| ECOG PS | 0.16 | 0.14 | 0.11 | 0.06 | 0.09 | 0.08 | 0.10 | 0.07 | 0.05 | 0.12 | 0.23 | 0.13 |
| Ferritin | 0.15 | 0.11 | 0.08 | −0.01 | 0.03 | 0.01 | 0.11 | 0.08 | −0.01 | 0.06 | 0.01 | 0.14 |
| LDH | 0.12 | 0.09 | 0.07 | 0.04 | 0.02 | 0.01 | 0.12 | 0.09 | 0.07 | −0.01 | 0.01 | 0.08 |
| β2-microglobulin | 0.14 | 0.14 | 0.14 | 0.15 | 0.11 | 0.10 | 0.02 | 0.07 | 0.04 | 0.01 | 0.00 | 0.38 |
| Bone marrow fibrosis | 0.04 | 0.04 | 0.05 | 0.04 | 0.01 | 0.02 | 0.05 | 0.03 | 0.09 | 0.00 | 0.01 | 0.05 |
| Cytogenetic categories | 0.25 | 0.14 | 0.09 | 0.06 | 0.02 | 0.00 | 0.28 | 0.19 | 0.17 | 0.14 | 0.06 | 0.06 |


Dxy is a measure of correlation varying between −1 and 1, with 0 indicating no correlation and 1 indicating perfect concordance between prognosis and survival or, respectively, time to transformation (see also the short explanation of Dxy in the supplemental Data). Dxy values were tabulated conditional on minimum observation time for potential predictors and composite scores. Changes in Dxy values are consistent with hazard plots (Figures 1, 2, 4, and 5) and show similar loss of prognostic power over time. Scores with high initial prognostic power, even if decreasing over time, remained prognostically stronger than initially weaker scores. With respect to single parameters, bone marrow blast percentage is the strongest single predictor. Its predictive loss over time is consistent with most other parameters and scores. Cytogenetic pattern is of high importance at time of diagnosis with steady loss of prognostic power. Age showed growing negative impact on survival, although not on time to AML progression. For hemoglobin, neutrophils, platelets, and bone marrow blasts, the cut points were those used for the IPSS-R. Age groups were categorized as ≤55, >55 to ≤65, >65 to ≤75, >75 to ≤80, and >80 years. For serum lactate dehydrogenase (LDH) and β2-microglobulin, the upper limit of normal was the cut point; for serum ferritin, 350 ng/mL was the chosen cut point. Cytogenetic categories are those used in the IPSS-R. "In lower-risk patients" denotes application of LR-PSS and IPSS-R to lower-risk patients only (ie, IPSS-R very low, low, intermediate). "WHO only" indicates that IPSS-R was applied exclusively to patients classifiable according to WHO. For dichotomization into 2 groups with higher- vs lower-risk patients, Dxy values are tabulated for combined IPSS-R very-low-, low-, and intermediate-risk vs high- and very-high-risk patients and for a cutoff at 3.5 IPSS-R score points (IPSS-R-DLH ≤3.5/>3.5).

DLH, dichotomizing lower vs higher risk; ECOG PS, Eastern Cooperative Oncology Group performance status.

Statistical methods

Time variations were described by the Cox zph test12 and by applying Dxy,13 a measure of concordance for censored data, at different observation periods (landmarks). Smoothed hazard graphs for time intervals were calculated.14 Cause-specific hazard was estimated for time to leukemic transformation and, for consistency, the related Kaplan-Meier curves are based on these cause-specific hazards (ie, they do not account for competing risks of death because the aim was to describe the change of hazard with time, not to describe the cumulative incidence of transformations).15 The potential influence of scoring weights on stability was analyzed by the creation of hypothetical time-specific scores as landmark reference scores.
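The landmark use of Dxy can be illustrated with a minimal sketch. It is not the authors' R code: the function names, the simplified handling of tied times, and the toy cohort are all assumptions made for illustration. Dxy is computed here as Somers' rank correlation for right-censored data (equivalently 2C − 1, where C is the concordance index), and a landmark value is obtained by restricting the calculation to patients still under observation after the landmark.

```python
from itertools import combinations


def somers_dxy(times, events, scores):
    """Somers' Dxy (= 2*C - 1) for right-censored data.

    times  : follow-up time per patient
    events : 1 if the event was observed, 0 if censored
    scores : prognostic score, higher = higher predicted risk
    """
    concordant = discordant = tied = 0
    for i, j in combinations(range(len(times)), 2):
        # Let `a` be the patient with the shorter follow-up.
        a, b = (i, j) if times[i] <= times[j] else (j, i)
        # Simplification (assumption): pairs with equal times are skipped;
        # a pair is usable only if the shorter follow-up ended in an event.
        if times[a] == times[b] or not events[a]:
            continue
        if scores[a] > scores[b]:
            concordant += 1
        elif scores[a] < scores[b]:
            discordant += 1
        else:
            tied += 1
    usable = concordant + discordant + tied
    if usable == 0:
        raise ValueError("no usable pairs")
    return (concordant - discordant) / usable


def landmark_dxy(times, events, scores, landmark):
    """Dxy restricted to patients still under observation after `landmark`."""
    keep = [k for k, t in enumerate(times) if t > landmark]
    return somers_dxy(
        [times[k] for k in keep],
        [events[k] for k in keep],
        [scores[k] for k in keep],
    )


# Invented toy cohort: follow-up in months, event flag, score at diagnosis.
toy_times = [3, 8, 15, 30, 40, 50, 60, 70]
toy_events = [1, 1, 1, 1, 1, 0, 1, 0]
toy_scores = [5, 4, 4, 1, 3, 2, 2, 1]

for months in (0, 24):
    value = landmark_dxy(toy_times, toy_events, toy_scores, months)
    print(months, round(value, 3))
```

In this invented cohort the high-scoring patients die early, so Dxy drops from about 0.69 at diagnosis to 0.125 among patients observed beyond 24 months, which mirrors the kind of attenuation tabulated in Table 1.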

A specific hypothesis connected to the interpretation of the results required particular methods. Most scores are based on Cox models. In these models, a certain set of potential predictors is selected, and the model estimates optimal weights to form a prognostic score as a weighted sum of these potential predictors. Because the majority of events occurred early, the estimation was dominated by the initial period after diagnosis. Our question of interest was whether weights optimized for later periods would improve prediction in these later periods. Therefore, hypothetical scores were estimated for yearly landmarks for 1 to 5 years.

Because the aim of this project did not imply clinically plausible null hypotheses, formal significance tests were not considered appropriate. All analyses were performed by using the open source software R version 3.1.2,16 including the survival17 and bshazard14 packages. For an additional discussion of methods and models used, see the supplemental Data, available on the Blood Web site.

Results

Changes in the subgroup-specific hazards over time

Changes of hazards over time are shown by smoothed hazard plots (Figure 1A) using IPSS-R for WHO-classified patients to serve as an example. Smoothed hazard plots basically contain the same information as the corresponding Kaplan-Meier curves (Figure 1B). The information is displayed in a different form to help visualize changes in risk at different time intervals.

Figure 1. Survival of IPSS-R–classified patient subgroups using smoothed hazard plots and corresponding Kaplan-Meier curves (representative example). (A) Smoothed hazard plots more clearly demonstrate changes in risk at different time intervals than do (B) Kaplan-Meier plots. The smoothed hazard for very high risk indicates 10% monthly mortality risk in the beginning (A, top arrow) in agreement with the Kaplan-Meier curve. After approximately 30 months (A, middle arrow), 5% monthly mortality for the very-high-risk group is shown, which is not clearly visible in the Kaplan-Meier curve. The mortality risks of the remaining patients for all risk groups are similar after approximately 60 months. Note that the time scale in (B) is expanded to improve visibility of the decline in the first year. The bold black dotted line represents all patients. int, intermediate; pts, patients; vhr, very high-risk.


The enlarged section of the survival curves by IPSS-R (Figure 1B) shows, for example, that the curve for very high risk starts at 1.0 (ie, 100%) (at 2 months because stable disease for 2 months was one of the inclusion criteria for the IPSS-R) and declines to about 0.9 (ie, about 90%) after 1 month (ie, after 3 months from diagnosis). That means the initial mortality is 10% per month. The smoothed hazard for very high risk roughly indicates 0.1 (ie, 10%) hazard (roughly interpretable as 10% mortality per month) in the beginning (Figure 1A, top arrow). This is consistent with data represented by the Kaplan-Meier curve.

However, after 30 months, the hazard plot (Figure 1A, middle arrow) shows 0.05 (ie, a 5% monthly mortality) for the very-high-risk group. Whereas a Kaplan-Meier curve shows the estimated proportion of persons still alive at each point during follow-up time, the hazard plot shows the estimated proportion that died during a defined interval (here, 1 month), given that a person is still alive at the start of the interval of interest. The fact that the force of mortality in the very-high-risk group decreases from 10% per month to 5% per month after about 2.5 years is clearly visible in the hazard plot but not readily seen in the survival curve.
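The relation between the two displays can be made concrete with a small sketch. It reads a monthly hazard off two neighboring points of a survival curve as the conditional probability of dying during the interval. The function name is hypothetical, and the survival value of 0.20 at 30 months is an invented figure used only to show why a 5% monthly hazard is hard to see late in a Kaplan-Meier curve.

```python
def interval_hazard(surv_start, surv_end):
    """Probability of dying during an interval, given survival to its start.

    surv_start, surv_end: survival-curve values at the start and end of the
    interval (proportions between 0 and 1).
    """
    if not 0 < surv_start <= 1 or not 0 <= surv_end <= surv_start:
        raise ValueError("expected 0 <= surv_end <= surv_start <= 1")
    return 1 - surv_end / surv_start


# Early in follow-up: the curve falls from 1.0 to about 0.9 within 1 month.
early = interval_hazard(1.0, 0.9)

# Later (invented numbers): only 20% remain alive, and the curve falls
# from 0.20 to 0.19 within 1 month.
late = interval_hazard(0.20, 0.19)

print(f"early monthly hazard: {early:.2f}")
print(f"late monthly hazard:  {late:.2f}")
```

Under these assumed values, the early drop of 0.10 on the survival scale corresponds to a 10% monthly hazard, whereas the late 5% monthly hazard appears as a drop of only 0.01 on the survival scale, which is easy to overlook in a Kaplan-Meier plot.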

For the entire sample (black dashed line, lower arrow), the hazard plot shows 2% mortality per month after diagnosis and about 1% after 120 months. It can be seen that the mortality risks of the remaining patients for all risk groups are similar after about 60 months. The graph illustrates that similarity of risks derives mainly from a decline in the higher-risk groups (IPSS-R very high and high), whereas the mortality risk in the lower-risk groups (IPSS-R low and very low) remains essentially unchanged.

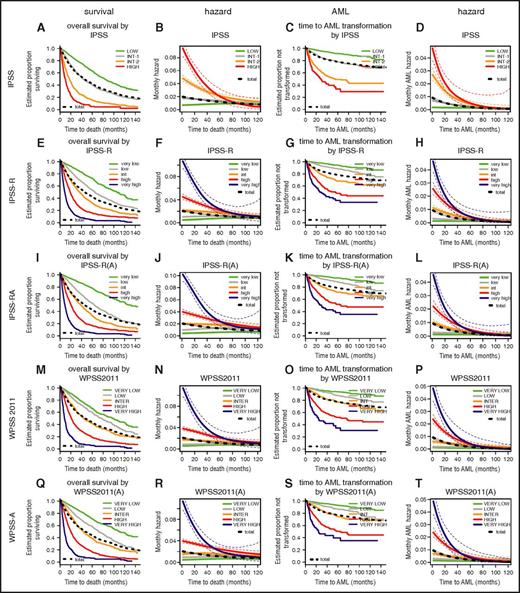

In Figure 2, the typical Kaplan-Meier plots are shown adjacent to hazard plots to facilitate comparison and to help illustrate the proportion of concerned patients at different time points. For a detailed view of individual scores, enlarged single figures are available in supplemental Figure 1A-T. The prognostic power of a score, as measured in this project by Dxy, results from the differences in the hazards of risk categories. Consequently, the attenuation of these hazards with time reduces the time-specific prognostic power of the score. The more similar the hazards of risk categories are from a specific time point onward, the less prognostically informative the original assignment is to a category.

Figure 2. Comparison of Kaplan-Meier curves and hazard plots for specific risk scoring systems for overall survival (columns 1 and 2) and for time to leukemic transformation (columns 3 and 4). Colors for risk groups are assigned in the order of risk from lowest to highest: green, gray, yellow, red, blue. The bold black dotted line represents all patients. For leukemic transformation, the cause-specific hazard is shown. The curves for time to leukemic transformation correspondingly are based on the cause-specific hazard (and are not cumulative incidence curves). Attenuation of hazards occurred over time after diagnosis in all scoring systems. After approximately 3.5 years, hazards in the separate risk groups became similar and essentially equivalent after 5 years. Note differing time scales for the Kaplan-Meier and hazard plots. A detailed view of individual scores shown in enlarged single figures is available in supplemental Figure 1A-T.


Change in the subgroup-specific hazards over time for prognostic scores

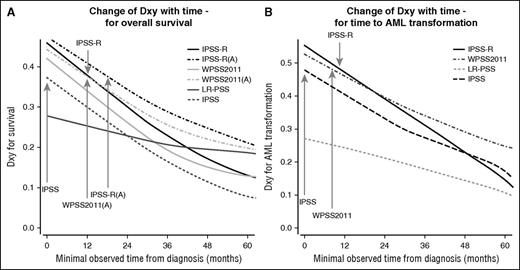

Attenuation of hazards over time was evident for all scoring systems. After approximately 3.5 years, hazards in the separate risk groups became similar, and they were essentially equivalent after 5 years (see hazard plots in Figure 2). Almost all scores similarly lost prognostic power over time from diagnosis so that the relative ranking remained virtually unchanged (Figure 3). Scores with high initial prognostic power, even if decreasing over time, retained a greater prognostic capacity than initially weaker scores (Table 1). Compared with survival, the decline of prognostic power was weaker for time to transformation to AML.

Figure 3. Comparison of change in prognostic power for specific scoring systems. (A) Change of Dxy with time for overall survival, and (B) change of Dxy with time for time to AML transformation (based on WHO-classified patients). The figure demonstrates that nearly all scores lost prognostic power over time, with the relative ranking remaining virtually unchanged. Scores with high initial prognostic power remained prognostically stronger than initially weaker scores. The prognostic power of the IPSS-R after about 9 months and of the IPSS-RA until around 14 months remains as high as that of the original IPSS at diagnosis (gray arrows). Inclusion of patient age, as in the IPSS-RA and WPSS-A, results in higher initial prognostic power and better stability in predicting survival (panel A) but not in predicting time to AML progression.


IPSS-R vs IPSS and FAB vs WHO

Figure 3 demonstrates that the prognostic power of the IPSS-R after about 9 months remains as high as that of the original IPSS at diagnosis for both end points. Similarly, the IPSS-RA maintains a comparable power until around 14 months after diagnosis (Figure 3, gray arrows). The IPSS-R generally performs better if the patient sample is restricted to WHO-defined MDS (excluding oligoblastic AML) (Table 1, IPSS-R WHO only).

WPSS

The WPSS variants show high initial prognostic power with loss in power over time, similar to that of other scoring systems. A meaningful comparison with the IPSS-R, necessarily based on WHO-classifiable patients only, demonstrates similar initial high prognostic power and attenuation over time.

Inclusion of age in prognostic scoring systems

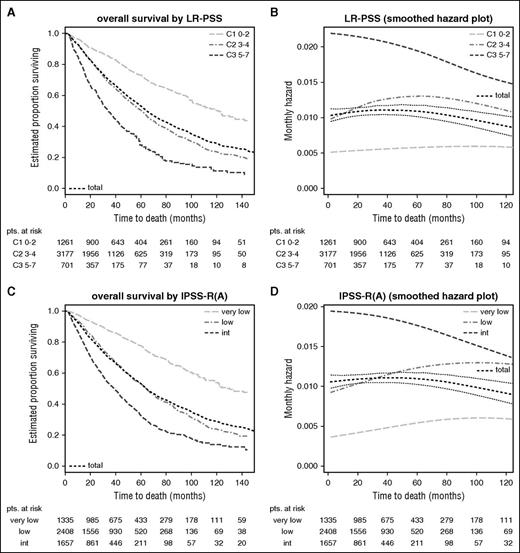

Stability of scores in lower- vs higher-risk patients

Scores applied only to lower-risk patients generally show lower prognostic power (because of less risk variation) but remain more stable over time (Table 1, LR-PSS in lower-risk patients and IPSS-RA in lower-risk patients) because they are less affected by the attenuation of subgroup-specific hazards (Figure 4B,D). This is seen for both the LR-PSS, initially derived from IPSS low- and intermediate-1-risk patients,5 and for the IPSS-RA if restricted to the very-low-/low-/intermediate-risk patients according to IPSS-R (see Figure 4A,C for survival curves). This ad hoc definition was used to afford a better comparison of the predictive power of the IPSS-R and the LR-PSS. In contrast, high-/very-high-risk categories show a sharp decline in risk over time (eg, IPSS-R in Figure 2F,H) for both end points. In lower-risk MDS, a slight increase of mortality risk occurs as a result of age (Figure 4C-D), whereas no similar effect is observed for time to transformation (Figure 2K-L).

Figure 4. Stability of scores in lower-risk patients (Kaplan-Meier curves and hazard plots). Overall survival shown by (A,C) Kaplan-Meier curves and (B,D) hazard plots using (A,B) LR-PSS and (C,D) IPSS-RA. The figure demonstrates that scores applied to lower-risk MDS have lower prognostic power but remain more stable over time and are less affected by the attenuation of subgroup-specific hazards (B,D). This is seen for both the LR-PSS and for the IPSS-RA, with both scores restricted to the IPSS-R very-low-/low-/intermediate-risk patients (panels A and C, survival curves). (C-D) An increase of mortality risk related to age is shown. LR-PSS categories: C1, score 0 to 2; C2, score 3 to 4; C3, score 5 to 7. Note differing time scales for the Kaplan-Meier and hazard plots.


Decline of prognostic power over time in potential prognostic variables

Decline of prognostic power for single potential prognostic variables, as measured by Dxy, is tabulated in Table 1. Bone marrow blast percentage is the strongest single predictor. Its predictive loss over time is in line with most other parameters and scores. Cytogenetic pattern is of high importance at time of diagnosis with steady loss of prognostic power. β2-microglobulin and performance status (available only for a subset of patients) seem to have moderate but stable influence on survival and increasing influence on time to progression. As a plausibility check of the analysis, age conversely showed growing negative impact on survival although not on time to AML progression.

Improvement by hypothetical time-specific scores

Given that most potential prognostic variables exhibit loss of prognostic power over time, we investigated the effect of assigning different weights to score-constituting components for time-specific Cox models (see supplemental Figure 2A-B for weights and supplemental Figure 2C-D for resulting curves). Dxy values for hypothetical scores for yearly landmarks for 1 to 5 years (overall survival) are shown in supplemental Table 1. These landmark reference scores do not show higher prognostic power for target times but rather attenuation of prognostic power.

Consequences for dichotomization into lower-risk vs higher-risk MDS

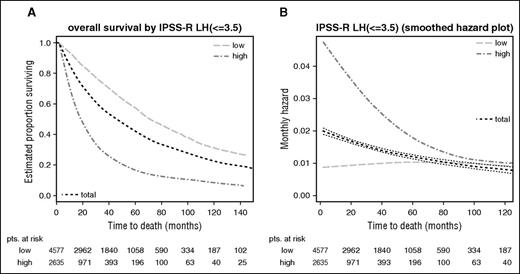

On the basis of both prognostic power and the differing declines of hazards in IPSS-R categories, the optimal dichotomization into just 2 risk categories, lower-risk vs higher-risk MDS patients (as are usually used in clinical practice), is the division obtained by using IPSS-R scores of ≤3.5 vs >3.5 points as a cutoff.

At time of diagnosis, the Dxy for dichotomization at this cutoff is 0.33 for overall survival and 0.44 for time to transformation, vs 0.31 and 0.37, respectively, if the cut is made between the IPSS-R very-low-/low-/intermediate-risk and high-/very-high-risk groups. Loss of prognostic power at different time points for both approaches can be seen in Table 1. Figure 5 shows the Kaplan-Meier curves for overall survival (A) and the corresponding hazard plots (B) for patients with an IPSS-R score of ≤3.5 vs >3.5 points. It clearly demonstrates this dichotomy, with good separation of patients with initially higher but declining risk from patients with constant lower risk. The lower-risk group proposed now includes a smaller proportion of patients than the original IPSS lower-risk group, consequently assigning more patients to the higher-risk group.
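The difference between the two dichotomizations compared in Table 1 can be sketched as follows. The IPSS-R category boundaries used here (≤1.5, >1.5 to 3, >3 to 4.5, >4.5 to 6, >6) are taken from the published IPSS-R and are not restated in this text; the function names are hypothetical.

```python
def ipss_r_category(score):
    """Map an IPSS-R score to its risk category (published IPSS-R boundaries)."""
    if score <= 1.5:
        return "very low"
    if score <= 3:
        return "low"
    if score <= 4.5:
        return "intermediate"
    if score <= 6:
        return "high"
    return "very high"


def dichotomize_by_category(score):
    """Lower risk = very low, low, or intermediate; higher risk = high or very high."""
    lower = ("very low", "low", "intermediate")
    return "lower" if ipss_r_category(score) in lower else "higher"


def dichotomize_by_cutoff(score, cutoff=3.5):
    """Lower risk = score at or below the cutoff (IPSS-R-DLH in Table 1)."""
    return "lower" if score <= cutoff else "higher"


# Scores inside the intermediate category are split by the 3.5-point cutoff.
for example_score in (3.0, 3.5, 4.0, 4.5, 5.0):
    print(
        example_score,
        ipss_r_category(example_score),
        dichotomize_by_category(example_score),
        dichotomize_by_cutoff(example_score),
    )
```

The two rules disagree only for scores above 3.5 and up to 4.5: these patients are intermediate risk by category, and therefore lower risk under the category-based split, but higher risk under the 3.5-point cutoff.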

Figure 5. Dichotomized separation of lower- vs higher-risk MDS patients in IPSS-R–stratified patients. (A) Kaplan-Meier curves for overall survival and (B) the corresponding hazard plots for patients with an IPSS-R score of ≤3.5 vs >3.5 points, the cutoff that yields the best results regarding prognostic power. Panels A and B show a good separation of patients with initially higher but declining risk vs patients with constant lower risk. Note differing time scales for the Kaplan-Meier and hazard plots.


Discussion

MDSs have been described as a spectrum of dynamic disorders in which clonal evolution identified at a cytogenetic and molecular level may trigger progression.18,19 Conversely, time of disease progression may be heterogeneous.1 Risk for progression and survival may be estimated by risk-based categorization, which is generally performed at the time of diagnosis.2 Recently, prognostic scoring systems have been substantially improved by refined inclusion and by the addition of parameters based on the use of larger, comprehensive databases. In addition, the use of prognostic scoring systems for treatment decisions is recommended in disease management guidelines.20,21 However, data regarding the stability of risk scores over time are scarce.3

When using prognostic risk scoring systems in daily clinical practice, we implicitly assume that the risk within a specific risk category remains constant over the entire course of the disease. However, this study showed that all the scoring systems we evaluated for MDS risk categories discriminated better at time of diagnosis than they did at later time points (Figure 3; Table 1). In particular, our data demonstrated significant time-dependent changes in the risk for both overall survival and leukemic transformation, which differed between the specified patient populations (Figure 2). For higher-risk patients, the mortality risk declined sharply over time, approaching that observed in lower-risk MDS, whereas for lower-risk patients, the mortality risk remained essentially unchanged during follow-up.

One potential explanation for this finding is that the risk for AML transformation, which has a greater impact in the high-risk subset of patients, decreases more dramatically than the risk for mortality (Figure 2F,H). In contrast, age-related risk of death, which has a more pronounced effect on mortality in lower-risk patients, increased with time (Table 1). Risk attenuation is at least partially caused by selective loss of higher-risk patients over time. This loss may be an event such as death or leukemic transformation. In addition, a selection bias cannot be ruled out: the International Working Group for Prognosis in MDS data set used for developing the IPSS-R includes only untreated patients, and it is likely that a greater proportion of higher-risk compared with lower-risk patients is actively treated with passing time from diagnosis, and they are thus selectively excluded from this study. Because changes in risk over time may stem from disease-specific and also from patient-related factors such as comorbidities, loss of prognostic power over time may result not from poor quality of the scoring systems analyzed but from inherent survival dynamics of the MDS patient population.
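The contribution of selective loss can be illustrated with a simple calculation. The sketch below is not part of the study's analysis. It assumes a hypothetical risk group made up of 2 subpopulations, each with its own constant hazard, and the fractions and hazard values passed to the hypothetical function `mixture_hazard` are invented for illustration. Even though no individual patient's hazard changes, the hazard observed in the group declines because patients with the higher hazard leave the risk set earlier.

```python
import math


def mixture_hazard(t: float, frac_fast: float, hazard_fast: float, hazard_slow: float) -> float:
    """Observed hazard at time t in a group mixing two constant-hazard subpopulations.

    frac_fast   : fraction of the group with the higher hazard at time 0
    hazard_fast : constant hazard of that subpopulation (events per year)
    hazard_slow : constant hazard of the remaining patients (events per year)
    """
    # Share of the original group still at risk at time t, by subpopulation.
    at_risk_fast = frac_fast * math.exp(-hazard_fast * t)
    at_risk_slow = (1.0 - frac_fast) * math.exp(-hazard_slow * t)
    # The group hazard is the average of the two hazards,
    # weighted by who is still at risk.
    return (hazard_fast * at_risk_fast + hazard_slow * at_risk_slow) / (at_risk_fast + at_risk_slow)


def hazard_curve(years, frac_fast=0.5, hazard_fast=1.0, hazard_slow=0.1):
    """Group hazard at each time point in `years` (illustrative default values)."""
    return [mixture_hazard(t, frac_fast, hazard_fast, hazard_slow) for t in years]
```

With these illustrative values, the group hazard starts at the weighted average of the 2 hazards and falls toward the lower one as follow-up lengthens, which mirrors the attenuation pattern described for higher-risk patients without implying that it is the only mechanism involved.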

Theoretically, loss of prognostic power could also result from suboptimal weights of score components. We ruled out this possibility by providing hypothetical scores that were optimized for later time intervals comparable to landmark analyses and observed that these hypothetical scores lost prognostic power in a comparable fashion (supplemental Table 1). In addition, because single parameters decline to a similar extent, better weighting of these variables at time of diagnosis did not mitigate the decline of prognostic power of scores over time (Table 1). Data using somatic mutational molecular parameters with potential for prognosis18,21,22 will be of much interest for future analyses. The potential stability of such mutations over time requires further study.
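The landmark idea referred to above can be sketched in a few lines. This is an illustrative outline under stated assumptions, not the procedure used to derive the hypothetical optimized scores: each patient is represented by a hypothetical tuple of follow-up time in months, an event indicator, and the IPSS-R raw score at diagnosis, and the function names `landmark_cohort` and `share_higher_risk` are invented for this example.

```python
from typing import List, Tuple

# (follow-up in months, event observed, IPSS-R raw score at diagnosis)
Patient = Tuple[float, bool, float]


def landmark_cohort(patients: List[Patient], landmark: float) -> List[Patient]:
    """Keep patients still under observation after the landmark time.

    Follow-up is re-measured from the landmark, so any score evaluated on
    the returned cohort describes prognosis from that time point onward.
    """
    return [(t - landmark, event, score)
            for (t, event, score) in patients
            if t > landmark]


def share_higher_risk(patients: List[Patient], cutoff: float = 3.5) -> float:
    """Fraction of the cohort with a score above the cutoff."""
    if not patients:
        raise ValueError("empty cohort")
    return sum(1 for (_, _, score) in patients if score > cutoff) / len(patients)
```

Applying any prognostic measure to successive landmark cohorts shows how much discrimination remains among patients who are still at risk, and comparing `share_higher_risk` across landmarks indicates how the composition of the cohort has shifted since diagnosis.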

Because there is a loss of stability in prognostic scores, the question of the best approach for patient reevaluation arises. Conventionally, the time of first bone marrow examination with features defining MDS is accepted as the time of diagnosis.23 On the assumption that the impact of features changes very little, and bearing in mind the differing stability of hazards in different risk categories, scoring systems such as the IPSS-R may be used for reevaluation after diagnosis at time points during which clinically meaningful conclusions can still be drawn from risk score categories. Reevaluation could be done at specific intervals established according to clinical needs (eg, every 6 months for higher-risk patients and at longer intervals for lower-risk patients).

Our data on the differential development of risks for the single-score subgroups suggest, for the first time, that it would be reasonable to divide lower-risk and higher-risk MDS patients into 2 groups based on the IPSS-R, a fact that may be valuable for designing clinical trials and for patient management. As described, lower-risk MDS patients have virtually constant risk for both end points, whereas in higher-risk patients, risk diminishes substantially over time. If the aim is optimal prognostic separation of lower-risk vs higher-risk patients, then a dichotomization based on 3.5 scoring points of the IPSS-R raw score (ie, ≤3.5 vs >3.5) yielded the best results. This also best represents the different changes in risk categories over time (Figure 5; Table 1). In particular, all patients scoring >3.5 points fit well into the higher-risk group. The proportion of lower-risk patients using this dichotomization is smaller compared with the lower-risk group of the original IPSS, which was heterogeneous and contained patients with higher risk.

Our results serve to underscore the ongoing processes that occur during the course of the disease and should help guide clinical decisions in MDS. One interpretation relates to a new perception of higher- and lower-risk MDS in which lower-risk patient subgroups may be better described as a group of patients with constant lower risk (risk remaining the same over time) whereas the term “initially higher risk” MDS (but with decreasing risk over time) fits higher-risk subgroups better. Although our data are derived from untreated MDS patients, this is a representative patient cohort because the majority of MDS patients are still provided with supportive care without disease-modifying treatment.24,25 Future analysis of patients receiving treatment and comparison with the data presented herein are planned and may indicate the impact of therapy.

The following clinical recommendations can be derived from our data: if a patient is initially categorized as high risk, options for disease-modifying treatment should be considered immediately and, if deemed appropriate, a decision for treatment should be made as early as possible. If, in contrast, as a result of comorbidities, decreased performance status, clinical stability, or other reasons, a high-risk patient does not receive disease-modifying treatment at time of diagnosis but remains in stable condition for a prolonged period, the patient should be reevaluated for a specific treatment at that time. For lower-risk patients, it should be noted that risk remains relatively constant over time; therefore, surveillance and ongoing reevaluation should be maintained long term.

In conclusion, our data describe the change of risk within prognostic score categories over time in MDS and its effects on the construction and interpretation of prognostic scoring systems. Clinicians should be aware of these facts when assessing patients after time intervals and when making treatment decisions. This study clearly demonstrates that a cutoff point of 3.5 in the IPSS-R scoring system is the best for segregating MDS patients into 2 risk groups—lower risk and higher risk—for therapeutic purposes, although a loss of prognostic power compared with the use of raw score data or the 5 IPSS-R categories approach should be noted. Because the statistical tools used in our analysis may be applied to other prognostic scoring systems, it will be of interest to determine whether similar changes of risk over time are observed in other disease entities.

The online version of this article contains a data supplement.

The publication costs of this article were defrayed in part by page charge payment. Therefore, and solely to indicate this fact, this article is hereby marked “advertisement” in accordance with 18 USC section 1734.

Acknowledgment

This work was in part supported by the MDS Foundation, Inc.

Authorship

Contribution: M.P. designed, performed, and coordinated the research, collected, contributed, analyzed, and interpreted the data, and wrote the manuscript; H.T. designed and performed the research, performed the statistical analyses, produced the figures, and edited the manuscript; P.L.G., G.S., and A.A.v.d.L. collected, contributed, analyzed, and interpreted data and edited the manuscript; J.M.B., G.G.-M., F.S., D.B., P.F., A.L., J.C., M.L., J.M., S.M.M.M., S.M.-S., Y.M., M.P., M.A.S., W.R.S., J.S., R.S., S.T., P.V., T.V., F.D., H.K., A.K., L.M., M.C., D.H., and U.G. collected and contributed data, analyzed the results, and critically revised the paper; and C.F., M.M.L.B., and M.L.S. analyzed and interpreted the data and critically revised the paper.

Conflict-of-interest disclosure: The authors declare no competing financial interests.

Correspondence: Michael Pfeilstöcker, 3rd Medical Department Hanusch Hospital, H. Collinstr 30, 1140 Vienna, Austria; e-mail: michael.pfeilstoecker@wgkk.at.